AI Without Compromise: Power, Privacy, and Decentralized Intelligence

Jul 23, 2025


TL;DR:

AI Today: Powerful models rely on centralized cloud servers, making them privacy-invasive and beyond users' control.

The Tradeoff: Users must choose between AI that’s strong but invasive, or private but weak.

Why Local AI Struggles: Large models require huge computational resources, making them hard to run on personal devices.

Decentralized AI as a Solution: Networks like Bittensor, Gensyn, and Fetch.ai distribute AI workloads across multiple devices.

Pipeline Parallel Inference: Splitting AI models across consumer hardware allows large-scale AI without cloud dependence.

Cluster Protocol & EXO Labs: Building frameworks for decentralized AI execution and training.

Why This Matters: AI should be owned, private, and accessible, not locked behind corporate APIs.

The Future: AI that is scalable, personal, and entirely user-controlled.

The AI Dilemma: Power Without Privacy

AI is getting smarter, more integrated, and harder to live without. It writes our emails, schedules our meetings, generates our code, and even recommends what we should watch or buy next. But every AI assistant, chatbot, or automation tool we use comes with an invisible tradeoff: privacy.

The most powerful AI models need deep access to personal data to work properly. They function best when they can see our emails, messages, calendars, and browsing history. But handing over that level of access to a black-box system owned by a corporation? That’s a hard pill to swallow.

The reality is, today’s AI is either cloud-based and invasive or local and weak, forcing users to pick between power and privacy.

Why Can’t We Have Both?

The reason AI is locked behind cloud servers isn't primarily about control; it's about scale.

Frontier models are enormous, requiring clusters of GPUs for both training and inference. Running them on a single personal device is impractical, leaving cloud-based services as the only viable option for most users.

But what if we could keep full control over our AI, running large-scale models on our own hardware, without sacrificing performance?

That’s the next frontier: AI that is private, scalable, and entirely user-owned.

The AI Trust Problem: Why AI Feels Untrustworthy

AI is supposed to make life easier by automating tasks, streamlining workflows, and even making intelligent decisions. But despite its rapid progress, most people hesitate to rely on AI for anything deeply personal.

Why? Because AI, as it exists today, demands trust without offering real guarantees in return.

Who wants to hand over their private data to a black-box system owned by a corporation?

Three Core Issues That Undermine Trust:

1️⃣ Data Privacy

AI models today are mostly cloud-based, meaning user data must be sent to external servers for processing.

Once uploaded, this data is out of the user’s control: stored, analyzed, and sometimes even monetized without clear transparency.

This creates a dilemma: either accept AI’s full potential at the cost of privacy or limit AI’s capabilities to keep data secure.

2️⃣ Security Risks

Cloud-based AI introduces new attack vectors. The more personal data is stored remotely, the more valuable it becomes to hackers.

AI providers are attractive targets for breaches, making it nearly impossible to guarantee that sensitive information will remain safe.

The centralized nature of current AI systems means a single vulnerability could expose millions of users at once.

3️⃣ Lack of Control

The most powerful AI models belong to corporations, and users have little say in how they operate.

AI companies dictate updates, pricing, and access, meaning users can lose features, or even access, at any time.

AI should feel like a personal tool, but instead, it often feels like a service that users must rent without real ownership.

What users need is AI that is both powerful and private, but today's landscape forces them to choose between the two.

The Tradeoff Between Power and Privacy

Even if users were comfortable sharing personal information, they would still face another major dilemma: AI’s power comes at a cost, and right now, that cost is either privacy or accessibility.

The most advanced AI models demand enormous computational resources, making them impractical for personal devices.

Training and running these models require specialized hardware, often only available in corporate data centers.

This is why cloud-based AI has become the norm: it offloads the compute burden but, in doing so, forces users to trade privacy for convenience. Every request is processed on remote servers, meaning AI doesn't truly belong to the user. It's borrowed.

On the other hand, local AI offers control but at the expense of capability. Smaller models can run on personal hardware, but they struggle with complex reasoning, limiting their usefulness. The result? AI remains either a service that must be rented or an expensive luxury, when it should be a personal tool, fully owned and controlled by the user.

The Hardware Challenge: Why Running AI Locally Is So Hard

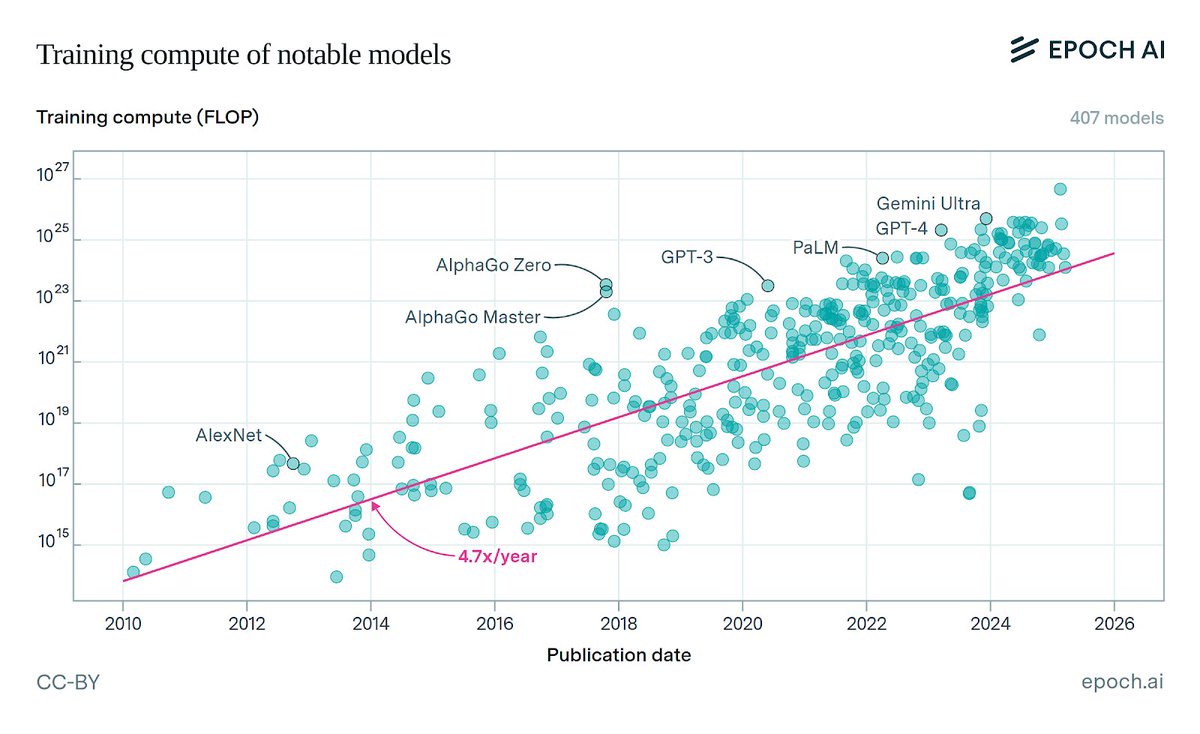

The limitations of local AI aren't just about software; they're about raw computational power. The models that power modern assistants, recommendation systems, and automation tools are massive: the largest are trained on thousands of high-end GPUs and typically served from multi-GPU data-center machines.

This level of compute is far beyond what an average user can afford, making personal AI ownership unrealistic.

Centralized providers invest in massive data centers, optimizing infrastructure to reduce costs and maximize efficiency. This makes cloud AI accessible, but at a price: users don’t own their models, they rent access to them.

Every interaction is processed externally, reinforcing the dependency on corporations that control both the hardware and the intelligence.

The alternative is distributed inference: breaking AI models into smaller components and spreading the workload across multiple devices. Instead of relying on a single high-powered machine, AI can draw on CPUs, GPUs, and networked consumer hardware together to run at scale.

This approach has the potential to break AI’s reliance on cloud providers, making personal AI a reality without sacrificing performance. But it also introduces new technical challenges, requiring novel ways to coordinate, optimize, and distribute workloads across diverse hardware environments.
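A back-of-envelope calculation makes the scale problem concrete. The sketch below counts only the memory needed to hold a model's weights (parameters × bytes per parameter) and ignores activations and the KV cache, so real requirements are higher. The model sizes (8B and 70B parameters), the 24 GB device, and the 80% usable-memory fraction are illustrative assumptions, not figures for any specific product.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def devices_needed(num_params: float, bytes_per_param: float,
                   device_memory_gb: float, usable_fraction: float = 0.8) -> int:
    """Minimum number of identical devices whose pooled memory can hold the weights.

    usable_fraction is an assumed headroom factor: part of each device's memory
    is reserved for activations, the KV cache, and the operating system.
    """
    usable_per_device = device_memory_gb * usable_fraction
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / usable_per_device)


# 16-bit weights use 2 bytes per parameter; 4-bit quantized weights use 0.5.
print(weight_memory_gb(70e9, 2))        # 140.0 (GB for a 70B model at 16-bit)
print(devices_needed(70e9, 2, 24))      # 8 devices with 24 GB each
print(devices_needed(70e9, 0.5, 24))    # 2 devices once quantized to 4-bit
print(devices_needed(8e9, 2, 24))       # 1 device for an 8B model
```

The ratio is what matters: a large model's weights alone exceed any single consumer device, while a handful of pooled devices can cover them, which is the gap distributed inference aims to close.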

Decentralized AI and Edge Computing

Cloud-based AI has long been the standard for handling massive workloads, but its reliance on centralized infrastructure comes at a cost: privacy trade-offs, loss of control, and restricted access. To break free from this dependency, AI needs a new architecture, one that distributes computation across networks instead of relying on corporate data centers.

Projects like Bittensor are leading the shift toward decentralized AI, where models run across multiple machines or directly on edge devices. By leveraging blockchain and distributed computing, these networks enable large-scale AI without a single point of control. The result is a more resilient, censorship-resistant AI ecosystem that keeps data private and computation decentralized, giving power back to users rather than corporations.

Pipeline Parallel Inference

To run powerful AI models without cloud dependence, AI workloads need to be distributed efficiently across available hardware. Pipeline parallel inference enables this by “sharding” a model: splitting its layers into consecutive stages that run on different devices, with each device passing its intermediate activations to the next. Instead of requiring expensive, high-end GPUs, this method spreads computation across consumer-grade hardware, from personal computers to other networked devices.

By pooling multiple computing resources, pipeline parallel inference removes the biggest bottleneck of local AI execution: no single device has to hold the entire model. Adding devices increases the combined memory available, so larger models fit, and when several requests are in flight the stages can work on them at the same time. More importantly, when the devices belong to the user, personal data stays under the user's control, preserving privacy while retaining the power of large-scale AI.
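The sketch below illustrates the idea on a toy model: the layers are split into contiguous stages (one per device), each stage hands only its output activations to the next, and the result matches running the whole model in one place. The helpers `make_layer`, `shard`, and `pipeline_schedule` are hypothetical names for illustration, and the "devices" are simulated in a single process, so no networking is shown.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def make_layer(scale: float, bias: float) -> Layer:
    """A toy 'layer': elementwise affine transform followed by ReLU."""
    def layer(x: Vector) -> Vector:
        return [max(0.0, scale * v + bias) for v in x]
    return layer


def shard(layers: Sequence[Layer], num_stages: int) -> List[List[Layer]]:
    """Split the layers into contiguous, near-equal stages (one per device)."""
    base, extra = divmod(len(layers), num_stages)
    stages, start = [], 0
    for i in range(num_stages):
        size = base + (1 if i < extra else 0)
        stages.append(list(layers[start:start + size]))
        start += size
    return stages


def run_stage(stage: Sequence[Layer], x: Vector) -> Vector:
    """What a single device does: run its layers in order."""
    for layer in stage:
        x = layer(x)
    return x


def pipeline_forward(stages: List[List[Layer]], x: Vector) -> Vector:
    """Pass activations from stage to stage; only these vectors would cross the network."""
    for stage in stages:
        x = run_stage(stage, x)
    return x


def pipeline_schedule(num_stages: int, num_microbatches: int) -> List[List[Tuple[int, int]]]:
    """For each time step, list the (stage, micro-batch) pairs that can run at once."""
    steps = []
    for t in range(num_stages + num_microbatches - 1):
        steps.append([(s, t - s) for s in range(num_stages)
                      if 0 <= t - s < num_microbatches])
    return steps


layers = [make_layer(1.0 + 0.1 * i, 0.5) for i in range(10)]
stages = shard(layers, 3)
print([len(s) for s in stages])           # [4, 3, 3]

x = [1.0, -2.0, 3.0]
assert pipeline_forward(stages, x) == run_stage(layers, x)

for t, active in enumerate(pipeline_schedule(3, 4)):
    print(t, active)
```

The schedule shows where the concurrency comes from: with 3 stages and 4 micro-batches, all three devices are busy in the middle time steps. A single request still visits the stages one after another, so pipelining raises capacity and throughput rather than per-request latency.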

Benefits of Pipeline Parallel Inference:

✅ Scalability – Combined memory and compute grow as more devices contribute, enabling larger models to run.

✅ Cost-Efficiency – Reduces the need for expensive, dedicated AI hardware by utilizing existing consumer devices.

✅ Privacy – Models execute on user-controlled devices without transmitting sensitive data to centralized servers, preserving user autonomy.

*AI That Can Take Action*

Traditional language models are limited to generating text: they provide responses but don't interact with the real world. The shift from passive AI to active AI comes with function calling, the ability for a model to request that external functions be executed on its behalf. Instead of just answering questions, AI can now retrieve live data, control devices, and automate actions in real time.

AI That Can Perform Real-World Actions

• Fetch live weather updates.

• Manage calendar events and automate scheduling.

• Control smart home devices.

• Automate messaging and email responses.
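Mechanically, function calling usually works like this: the application describes the available tools to the model, the model replies with a structured request (commonly JSON naming a tool and its arguments), and the application, not the model, runs the function and returns the result. The sketch below shows only that dispatch step. The tool names, the JSON shape, and the stubbed results are assumptions for illustration; each model provider defines its own request format.

```python
import json
from typing import Any, Callable, Dict

# Registry of functions the model is allowed to request (hypothetical tools).
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {}


def tool(fn: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> Dict[str, Any]:
    # Stub: a real tool would query a weather service here.
    return {"city": city, "forecast": "sunny", "temp_c": 22}


@tool
def create_event(title: str, start: str) -> Dict[str, Any]:
    # Stub: a real tool would write to the user's calendar here.
    return {"status": "created", "title": title, "start": start}


def dispatch(model_output: str) -> Dict[str, Any]:
    """Parse the model's structured request and run the matching tool."""
    try:
        call = json.loads(model_output)
        name = call["name"]
        args = call.get("arguments", {})
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"error": f"malformed tool call: {exc}"}
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    return TOOLS[name](**args)


# Pretend the model produced this request for "What's the weather in Lisbon?"
result = dispatch('{"name": "get_weather", "arguments": {"city": "Lisbon"}}')
print(result)   # {'city': 'Lisbon', 'forecast': 'sunny', 'temp_c': 22}
```

In a full loop the result would be sent back to the model so it can phrase a final answer. Keeping execution in the application also acts as a safety boundary: only explicitly registered tools can ever run.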

EXO Labs: Advancing Decentralized AI Execution

EXO Labs is developing a framework for running AI on distributed consumer hardware, reducing reliance on centralized cloud providers. By using techniques like Pipeline Parallel Inference and DiLoCo (Distributed Low-Communication training), EXO enables more efficient and private AI processing.

Decentralized AI Execution: EXO’s approach distributes AI workloads across multiple devices, making computation more accessible and resilient.

Pipeline Parallel Inference: Instead of processing entire models on a single machine, the model is split across different devices, so models too large for any one device can still run.

DiLoCo for AI Training: EXO is researching ways to train AI across distributed devices that synchronize only occasionally, keeping data local and reducing dependence on centralized infrastructure.
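DiLoCo's core loop is simple to sketch. Each worker trains its own copy of the model for many local steps without communicating; then the workers average their "outer gradients" (how far each copy moved away from the shared starting point), and a shared outer optimizer with Nesterov momentum applies the update. The toy below follows that structure with simplifications assumed for brevity: each worker's local loss is a quadratic pulling toward its own target (standing in for non-identical local data), the inner optimizer is plain SGD rather than the AdamW used in the DiLoCo paper, and the hyperparameters are illustrative. It is not EXO's code.

```python
from typing import List

Vector = List[float]


def local_grad(theta: Vector, target: Vector) -> Vector:
    """Gradient of the toy local loss 0.5 * ||theta - target||^2."""
    return [p - c for p, c in zip(theta, target)]


def inner_train(theta: Vector, target: Vector, steps: int, lr: float) -> Vector:
    """One worker's local phase: `steps` SGD updates with no communication."""
    local = list(theta)
    for _ in range(steps):
        grad = local_grad(local, target)
        local = [p - lr * g for p, g in zip(local, grad)]
    return local


def diloco(worker_targets: List[Vector], rounds: int = 30, inner_steps: int = 10,
           inner_lr: float = 0.1, outer_lr: float = 0.7, momentum: float = 0.9) -> Vector:
    dim = len(worker_targets[0])
    theta = [0.0] * dim          # shared model parameters
    buf = [0.0] * dim            # outer Nesterov momentum buffer
    for _ in range(rounds):
        # 1. Every worker starts from the shared parameters and trains locally.
        local_models = [inner_train(theta, t, inner_steps, inner_lr) for t in worker_targets]
        # 2. The only communication per round: average the outer gradients theta - theta_k.
        outer_grad = [sum(theta[i] - m[i] for m in local_models) / len(local_models)
                      for i in range(dim)]
        # 3. Outer step with Nesterov momentum.
        buf = [momentum * b + g for b, g in zip(buf, outer_grad)]
        theta = [p - outer_lr * (g + momentum * b)
                 for p, g, b in zip(theta, outer_grad, buf)]
    return theta


# Three workers with different local data; the global optimum is their mean, [2.0, 2.0].
targets = [[1.0, 4.0], [3.0, 0.0], [2.0, 2.0]]
theta = diloco(targets)
print(theta)   # values very close to 2.0 in each coordinate
```

The communication saving is the point: each worker takes 300 local steps but synchronizes only 30 times, instead of after every step as in standard data-parallel training.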

Why This Matters

- AI becomes scalable, private, and user-controlled.

- Reduces reliance on cloud-based AI.

- Enables personal AI ownership rather than AI-as-a-service.

- By decentralizing AI execution, EXO Labs is helping to create a more secure and user-driven AI ecosystem.

The Path Forward: AI as a Personal Tool, Not a Rented Service

AI should be as private and user-controlled as a smartphone, not something locked behind corporate APIs and paywalls. Today, most AI models run on centralized cloud servers, making users dependent on third-party providers. Decentralized execution and training offer a way out, allowing AI to operate directly on personal devices or distributed networks.

This is about ensuring ownership and control remain with the user. The future of AI isn't a service you rent; it's a tool you own, shaping a world where intelligence is both accessible and independent.

Cluster Protocol: Bridging Power and Privacy

Cluster Protocol addresses this core dilemma of AI, balancing power with privacy, by enabling decentralized AI execution. Unlike traditional cloud-based models that demand user data for centralized processing, Cluster leverages distributed computing to ensure that AI workloads are spread across multiple devices.

This not only enhances scalability and cost-efficiency but also keeps sensitive data local, preserving user autonomy. By supporting decentralized datasets and collaborative model training, Cluster reduces reliance on corporate-controlled infrastructures, democratizing access to AI development.

Its modular framework empowers users to create intelligent agents tailored to specific needs, transforming AI into a personal tool rather than a rented service.

Intelligent Agent Workflows: Cluster facilitates the creation of intelligent agents that can autonomously perform actions based on predefined rules and real-time data, enhancing productivity and efficiency in daily tasks.
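The pattern described here, predefined rules evaluated against incoming real-time data that trigger actions, can be sketched in a few lines. The `Rule` structure, the observation fields, and the actions below are hypothetical illustrations and do not represent Cluster Protocol's API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Observation = Dict[str, Any]


@dataclass
class Rule:
    name: str
    condition: Callable[[Observation], bool]   # when should the rule fire?
    action: Callable[[Observation], str]       # what should the agent do?


def run_agent(rules: List[Rule], observations: List[Observation]) -> List[str]:
    """Check every rule against each new observation and record the actions taken."""
    log = []
    for obs in observations:
        for rule in rules:
            if rule.condition(obs):
                log.append(f"{rule.name}: {rule.action(obs)}")
    return log


rules = [
    Rule("rain_alert",
         condition=lambda o: o.get("rain_prob", 0.0) > 0.7,
         action=lambda o: f"remind user to take an umbrella in {o['city']}"),
    Rule("meeting_soon",
         condition=lambda o: o.get("minutes_to_meeting", float("inf")) <= 10,
         action=lambda o: "send a meeting reminder"),
]

# A stream of (hypothetical) real-time readings.
observations = [
    {"city": "Berlin", "rain_prob": 0.9, "minutes_to_meeting": 45},
    {"city": "Berlin", "rain_prob": 0.2, "minutes_to_meeting": 5},
]

for entry in run_agent(rules, observations):
    print(entry)
```

Keeping conditions and actions as separate callables makes each rule easy to inspect and test on its own, which matters when an agent acts without asking first.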

AI That Belongs to You

AI is more capable than ever, yet still trapped behind centralized platforms that demand trust without offering real control. Today’s users are forced to choose between power and privacy, but that tradeoff shouldn’t exist.

Frameworks such as Cluster and EXO Labs demonstrate this shift. EXO, for example, lets you unify everyday devices (phones, laptops, even Raspberry Pis) into a distributed AI cluster that runs large models locally, without specialized hardware or a central server. EXO uses dynamic model partitioning and peer-to-peer connections, so each device contributes its resources and the model is split across the network according to what each device can handle. This means you can run state-of-the-art AI on hardware you own, with all computation and data staying local, ensuring privacy and autonomy.
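EXO describes splitting models according to each device's resources. One simple way to do that, assumed here purely for illustration, is to give every device a contiguous block of layers sized in proportion to its available memory. The device names and memory figures are hypothetical, and this is not EXO's implementation.

```python
from typing import Dict, List, Tuple


def partition_layers(num_layers: int,
                     device_memory_gb: Dict[str, float]) -> List[Tuple[str, int, int]]:
    """Assign each device a contiguous layer range [start, end) proportional to its memory.

    Boundaries come from cumulative memory, so rounding errors do not
    accumulate and every layer is assigned exactly once.
    """
    total = sum(device_memory_gb.values())
    # Largest devices first; the ordering is an arbitrary choice for this sketch.
    devices = sorted(device_memory_gb.items(), key=lambda kv: kv[1], reverse=True)
    plan, start, cumulative = [], 0, 0.0
    for i, (name, mem) in enumerate(devices):
        cumulative += mem
        end = num_layers if i == len(devices) - 1 else round(num_layers * cumulative / total)
        plan.append((name, start, end))
        start = end
    return plan


cluster = {"laptop": 16.0, "desktop": 24.0, "phone": 8.0}   # GB of usable memory (hypothetical)
for name, start, end in partition_layers(32, cluster):
    print(f"{name:8s} layers {start:2d}-{end - 1:2d} ({end - start} layers)")
```

With 48 GB in total, the 24 GB desktop receives half of the 32 layers (0 to 15), the laptop the next 11, and the phone the last 5. A real system would also weigh compute speed and network links; this sketch considers memory only.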

The future of AI isn't something you rent; it's something you own: AI that runs for you, on your terms, with privacy and transparency by design.

About Cluster Protocol

Cluster Protocol is making intelligence coordinated, co-owned & trustless.

Our infrastructure is built on two synergistic pillars that define the future of decentralized AI:

Orchestration Infrastructure: A permissionless, decentralized environment where hosting is distributed, data remains self-sovereign, and execution is trust-minimized. This layer ensures that AI workloads are not only scalable and composable but also free from centralized control.

Tooling Infrastructure: A suite of accessible, intuitive tools designed to empower both developers and non-developers. Whether building AI applications, decentralized AI dApps, or autonomous agents, users can seamlessly engage with the protocol, lowering barriers to entry while maintaining robust functionality.
