Brain vs Bots: Understanding AI Architecture Through the Lens of Human Cognition

Nov 26, 2024


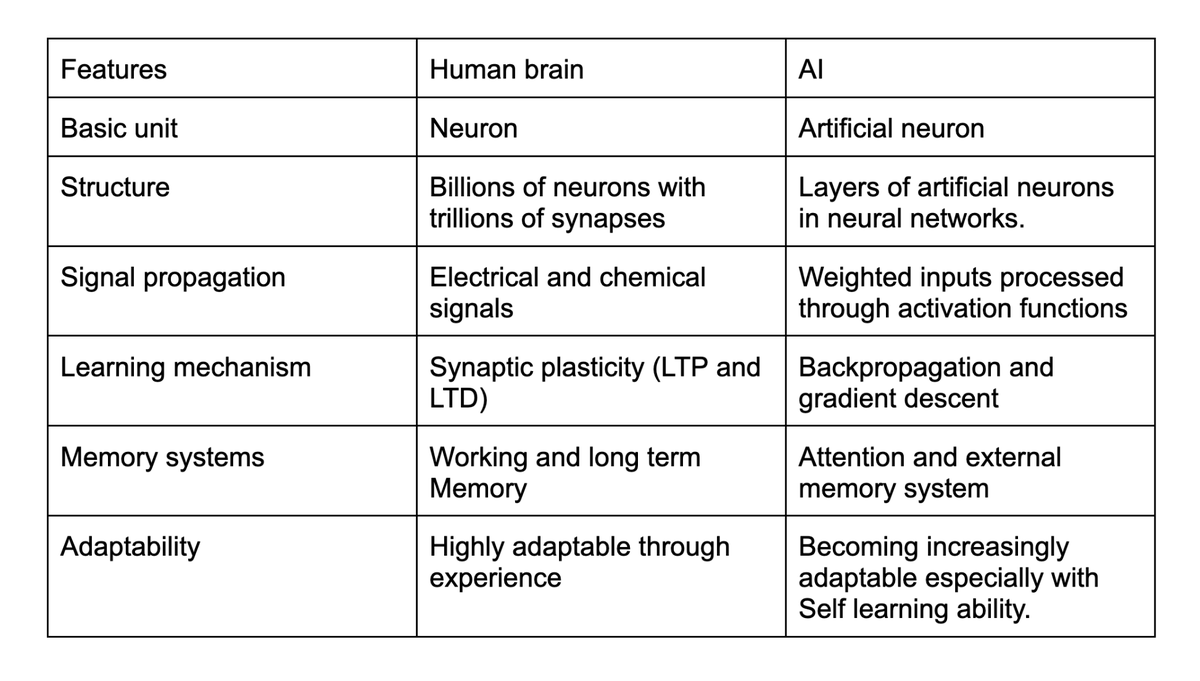

The human brain is often called the most complex machine known, capable of astonishing reasoning and memory. Shaped over millions of years of evolution, it has enabled humans to survive, and eventually dominate, alongside fiercer, more physically powerful species. This intricate organ, composed of roughly 86 billion neurons and trillions of synaptic connections, operates continuously and harmoniously, day and night, without rest.

Our brain’s unique capacity for thought, problem-solving, and creativity has driven us to automate tasks and seek efficiency wherever possible. This natural inclination to simplify and optimize has spurred the development of artificial intelligence, a field that aspires to replicate, at least in part, the extraordinary capabilities of human cognition. Today, researchers are in a global race to achieve Artificial General Intelligence (AGI), an AI that could match the full breadth of human abilities.

The human brain’s complexity and adaptability serve as both the inspiration and the ultimate benchmark for AI. While popular opinion often suggests that AI “thinks like the brain,” the reality is more nuanced and compelling. This deep dive will explore the architectural parallels between modern AI systems, particularly Large Language Models (LLMs), and the human brain, shedding light on both striking similarities and fundamental differences. It will also examine why, of all the wonders of nature, AI draws most heavily on the brain’s design for inspiration.

Brain vs Bots

This blog explores and compares the comprehension units of the human brain and artificial intelligence (AI) models, particularly large language models (LLMs). We will examine their basic structures and functionality and highlight the similarities and differences between the two.

The Foundation - Neural Architecture

BRAIN

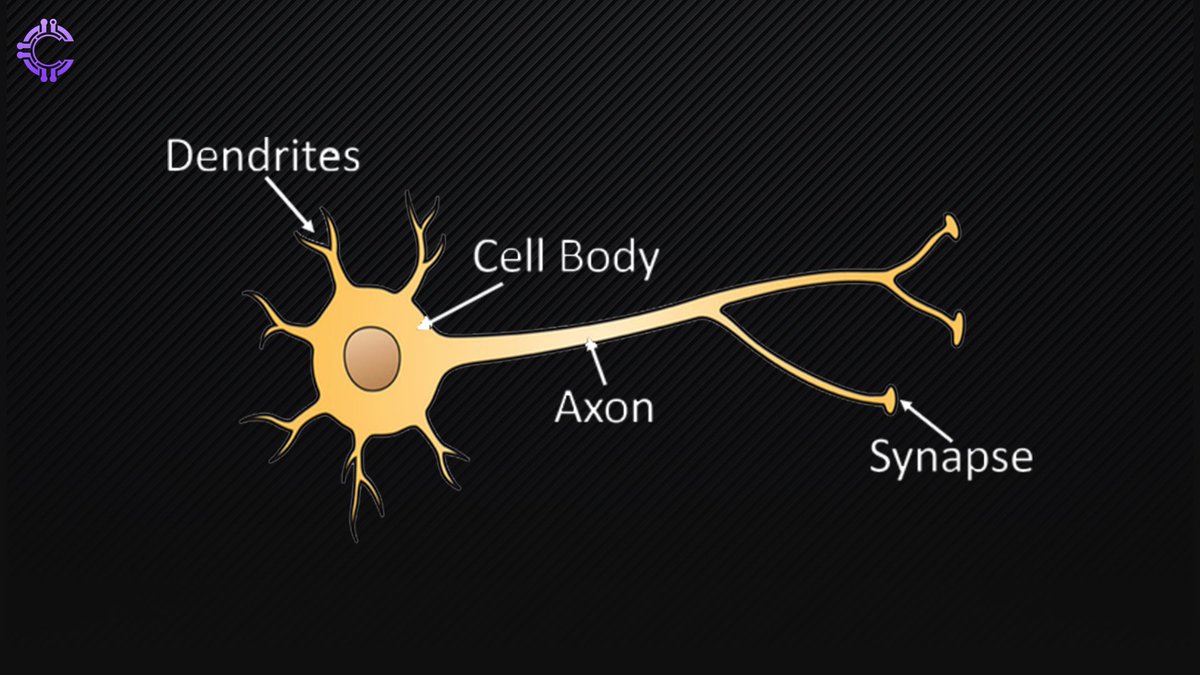

At the core of the brain’s remarkable computing power is the neuron—a specialized cell that forms the foundation of all thoughts, actions, and sensations. Each neuron acts as a tiny, sophisticated processor, transmitting information through intricate electrical and chemical signaling pathways that power the entire nervous system.

The Four Key Components

Dendrites - The Signal Collectors - Branch out like tree limbs to receive signals from thousands of neighboring neurons. Sensitive to chemical signals, these “antennas” play a critical role in gathering information.

Soma - The Command Center - Contains the nucleus and vital machinery that process incoming signals. Integrates information and makes the essential “fire or don’t fire” decision, initiating communication.

Axon - The Information Highway - A long, slender fiber that transmits signals, sometimes spanning up to a meter. Often insulated by myelin, allowing rapid, efficient signal travel akin to a high-speed cable.

Synapses - The Communication Junctions - Connections where neurons exchange information by releasing neurotransmitters. Act as bridges that facilitate complex networks, enabling higher-order processing.

The Network Architecture

The brain’s neural network is organized in sophisticated layers and patterns that support complex processing. In the neocortex alone, there are six distinct layers, each responsible for specialized functions. Neurons form feedback loops, allowing recursive processing that supports advanced cognition. The brain also relies on parallel processing, with multiple pathways operating simultaneously to maximize efficiency and responsiveness. Specialized regions handle specific tasks, such as language, movement, and memory, yet remain interconnected, enabling seamless coordination and integration across different types of information. This intricate network architecture creates a balanced system, capable of everything from reflexive actions to complex reasoning and creativity.

This system of approximately 86 billion neurons and trillions of connections allows for everything from survival instincts to abstract thinking. Nature has crafted an architectural masterpiece, both specialized and adaptable, that powers our experience of consciousness, creativity, and complex thought, setting the bar for all artificial intelligence models to reach.

BOTS

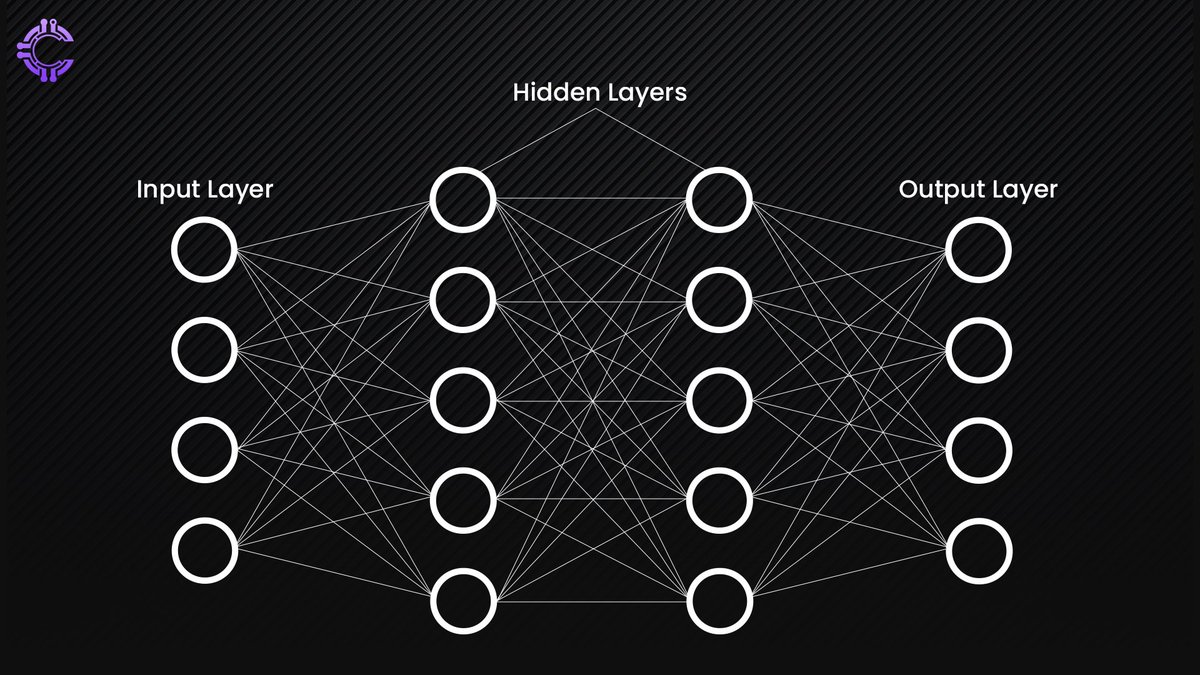

In a remarkable feat of biomimicry, artificial neural networks have been designed to loosely mirror the brain's architecture. These artificial systems take inspiration from nature's blueprint while adapting it for computational efficiency.

At the core lies the artificial neuron, a mathematical model that mirrors its biological counterpart. Just as biological neurons receive signals through dendrites, artificial neurons accept inputs through weighted connections. Each input is multiplied by a weight value, representing the connection's strength – similar to how synapses in the brain can be strong or weak. These weighted inputs are then summed and passed through an activation function, which decides whether and how strongly the artificial neuron should "fire," much like the soma's decision-making process in biological neurons.
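The weighted-sum-then-activate computation described above fits in a few lines. The sketch below is a minimal, hypothetical Python illustration: the function names, the example weights, and the choice of sigmoid as the default activation are assumptions for the example, not taken from any particular library.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into (0, 1): a smooth 'firing strength'."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Pass positive signals through unchanged; silence negative ones."""
    return max(0.0, z)


def artificial_neuron(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation.

    The weights play the role of synaptic strengths; the activation is the
    'fire or don't fire' step (here graded rather than all-or-nothing).
    """
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Three incoming signals: two excitatory connections and one inhibitory (-0.4).
print(artificial_neuron([1.0, 0.5, 2.0], [0.2, 0.8, -0.4], bias=0.1))  # about 0.475
```

Here the weighted sum is 0.2 + 0.4 - 0.8 + 0.1 = -0.1, so the sigmoid returns a value just under 0.5: the inhibitory input keeps the neuron from firing strongly.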

The network architecture itself shows intriguing parallels to brain structure. Hidden layers in artificial networks function similarly to the brain's cortical layers, processing information at increasing levels of abstraction. Modern networks often incorporate skip connections, allowing information to bypass certain layers – much like the brain's direct neural pathways that enable rapid response to critical stimuli. Perhaps most fascinating is the attention mechanism, which is loosely analogous to our brain's ability to focus on relevant information while filtering out noise.
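A skip (residual) connection simply adds a layer's input back to its output, so information can pass through even when the layer itself contributes little. A minimal sketch, assuming a single hypothetical fully connected ReLU layer whose output has the same length as its input (real networks use learned weights and usually several layers inside each block):

```python
def relu(z):
    return max(0.0, z)


def dense_relu(x, weights, biases):
    """Fully connected layer: out[j] = relu(sum_i weights[j][i] * x[i] + biases[j])."""
    return [relu(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def residual_block(x, weights, biases):
    """Skip connection: add the block's input back to the layer's output.

    Requires the layer to produce a vector the same length as x.
    """
    fx = dense_relu(x, weights, biases)
    if len(fx) != len(x):
        raise ValueError("residual connections need matching shapes")
    return [xi + fi for xi, fi in zip(x, fx)]


x = [1.0, -2.0]
# Even a layer that outputs nothing useful (all-zero weights) lets x pass through.
print(residual_block(x, [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]))  # [1.0, -2.0]
# An identity-weight layer adds relu(x) on top of the bypassed input.
print(residual_block(x, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))  # [2.0, -2.0]
```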

Just as different brain regions specialize in specific tasks, modern AI systems often employ specialized network architectures. Convolutional Neural Networks excel at visual processing, while Recurrent Neural Networks handle sequential data – each architecture optimized for its particular function, much like specialized regions in the biological brain.
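To make the contrast concrete, here is a hedged sketch of the core operation behind each architecture: a 1-D convolution, where one small kernel slides along the input, and a minimal scalar recurrent cell whose hidden state carries information from earlier steps. The `[-1, 1]` edge-detecting kernel and the RNN weights are hypothetical toy values; real CNNs and RNNs learn multi-channel weights during training.

```python
import math


def conv1d(signal, kernel):
    """'Valid' 1-D convolution as used in CNNs (strictly, cross-correlation).

    The same small kernel is reused at every position, so a pattern is
    detected wherever it appears in the input.
    """
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def simple_rnn(sequence, w_in, w_rec, bias=0.0):
    """Minimal scalar RNN: h_t = tanh(w_in * x_t + w_rec * h_(t-1) + bias).

    The hidden state h carries a running summary of everything seen so far.
    """
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states


# A [-1, 1] kernel responds where the signal rises (+1) or falls (-1).
print(conv1d([0, 0, 1, 1, 1, 0, 0], [-1, 1]))  # [0, 1, 0, 0, -1, 0]

# Same inputs, different order: the RNN's final state differs, so order matters.
print(simple_rnn([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5)[-1])
print(simple_rnn([0.0, 0.0, 1.0], w_in=1.0, w_rec=0.5)[-1])
```

The convolution cares about *what* local pattern appears, regardless of position; the recurrent cell cares about *when* things appear, which is why it suits sequential data.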

Information Processing - The Dance of Signals

BRAIN

Inside our brains, an intricate dance of electrical and chemical signals orchestrates every thought, memory, and action. This sophisticated information processing system operates through two main mechanisms: signal propagation and learning adaptation.

Signal Propagation

When neurons communicate, they generate action potentials - electrical signals that race along neural pathways like tiny lightning bolts. These electrical messages trigger the release of neurotransmitters at synapses, chemical messengers that bridge the gap between neurons.

Different neurotransmitters play different roles. In simplified terms, dopamine is closely tied to reward signaling, serotonin helps regulate mood, glutamate is the main excitatory transmitter, and GABA is the main inhibitory one.

[Neuron A] --(Action Potential)--> [Synapse] --(Neurotransmitter)--> [Neuron B]

As neurons receive thousands of inputs simultaneously, they perform synaptic integration - weighing both excitatory and inhibitory signals to decide whether to fire. This process includes temporal summation, where signals are integrated over time, allowing for complex information processing.
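Synaptic and temporal summation are often illustrated with a leaky integrate-and-fire model, a textbook simplification of a real neuron. The sketch below assumes discrete time steps and unitless, hypothetical values for the threshold and leak; it is an illustration of the idea, not a biophysically accurate model.

```python
def leaky_integrate_and_fire(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron (a textbook simplification).

    Each step the membrane value decays by `leak` and the new input is added.
    Positive inputs are excitatory, negative inputs inhibitory. When the value
    reaches `threshold` the neuron spikes (1) and resets; otherwise it stays
    silent (0).
    """
    v = reset
    spikes = []
    for current in inputs:
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = reset
        else:
            spikes.append(0)
    return spikes


# Temporal summation: three weak inputs in quick succession add up to a spike...
print(leaky_integrate_and_fire([0.4, 0.4, 0.4]))                    # [0, 0, 1]
# ...but the same inputs spread out in time leak away before reaching threshold.
print(leaky_integrate_and_fire([0.4, 0, 0, 0, 0.4, 0, 0, 0, 0.4]))  # all zeros
# An inhibitory input (-0.5) in the middle also prevents the spike.
print(leaky_integrate_and_fire([0.4, 0.4, -0.5, 0.4]))              # [0, 0, 0, 0]
```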

Learning Mechanisms

The brain's ability to learn relies on Hebbian learning - "neurons that fire together, wire together." When neurons activate together repeatedly, their connections strengthen through synaptic plasticity. This process manifests in two ways:

- Long-term potentiation (LTP): strengthening connections to form memories

- Long-term depression (LTD): weakening unused connections

Over time, the brain refines its networks through pruning (removing rarely used connections) and reinforcement (strengthening frequently used pathways). This continuous optimization creates efficient neural networks shaped by our experiences, enabling both stability and adaptability - the hallmark of our brain's remarkable processing power.

In simple terms, the brain optimizes learning by strengthening connections for the things we use and care about and weakening those we don’t.
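"Fire together, wire together" can be sketched as a Hebbian weight update, with a small decay term and a pruning pass standing in for weakening and removing unused connections. This is a deliberately crude model under stated assumptions: the learning rate, decay, and pruning threshold are hypothetical, and real LTP/LTD depend on timing and chemistry that this sketch ignores.

```python
def hebbian_step(weights, pre, post, lr=0.1, decay=0.01):
    """One Hebbian update: weights[j][i] connects presynaptic i to postsynaptic j.

    Pairs that are active together are strengthened (an LTP-like effect).
    Every weight also decays slightly, so connections that are never
    co-active slowly weaken (a crude stand-in for LTD).
    """
    return [[w + lr * pre[i] * post[j] - decay * w for i, w in enumerate(row)]
            for j, row in enumerate(weights)]


def prune(weights, min_strength=0.05):
    """Remove (zero out) connections that have become very weak."""
    return [[w if abs(w) >= min_strength else 0.0 for w in row] for row in weights]


# One postsynaptic neuron with two inputs; only input 0 ever fires alongside it.
weights = [[0.1, 0.1]]
for _ in range(100):
    weights = hebbian_step(weights, pre=[1.0, 0.0], post=[1.0])
print(weights)         # input 0 strengthened, input 1 decayed
print(prune(weights))  # the weakened connection is pruned to 0.0
```

Without the decay term, pure Hebbian growth is unbounded; the decay keeps the strengthened weight settling toward a finite value.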

BOTS

Artificial Processing

An artificial neural network functions somewhat like a digital brain: it is designed to recognize patterns and learn from experience.

Signal Propagation

The journey begins with forward propagation, where input data is passed through the network’s layers. Each neuron receives inputs, computes a weighted sum, and applies an activation function (e.g., ReLU or sigmoid) to determine its output. This process continues through the hidden layers until the final output layer generates predictions. The effectiveness of signal propagation is crucial: when signals can pass through many layers without vanishing or exploding, the model learns and generalizes from data more effectively.
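Forward propagation is just the single-neuron computation repeated layer by layer. A minimal sketch, assuming a hypothetical two-input network with one hidden layer of three ReLU units and a single sigmoid output; the weights are arbitrary illustrative values, not trained ones.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(x, weights, biases, activation):
    """weights[j] holds the incoming weights of neuron j in this layer."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def forward(x, layers):
    """Pass the input through each (weights, biases, activation) layer in turn."""
    for weights, biases, activation in layers:
        x = layer_forward(x, weights, biases, activation)
    return x


# Hypothetical network: 2 inputs -> 3 ReLU hidden units -> 1 sigmoid output.
network = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, 0.0], relu),
    ([[1.0, -1.0, 0.5]], [0.2], sigmoid),
]
print(forward([1.0, 2.0], network))
```

For the input `[1.0, 2.0]` the hidden activations are roughly `[0.1, 2.0, 0.0]` (the third unit is silenced by ReLU), and the output unit receives 0.1 - 2.0 + 0.2 = -1.7 before the sigmoid.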

Learning Mechanisms

Learning occurs via backpropagation, an algorithm that works out how each of the network’s weights contributed to the error between predicted and actual outputs. After forward propagation, the network calculates a loss value, which indicates how far off its predictions are. Backpropagation then sends this error backward through the network, using the chain rule to compute the gradient of the loss with respect to every weight, and gradient descent uses those gradients to update the weights iteratively, minimizing loss over time. This dual mechanism of signal propagation and learning enables neural networks to refine their performance and adapt to new data effectively.
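The loop of forward pass, loss, backward pass, and weight update can be written out by hand for a tiny network. A minimal sketch, assuming a hypothetical one-input network with four tanh hidden units, mean squared error loss, and plain gradient descent on a toy task (fitting y = x²); real frameworks compute these gradients automatically.

```python
import math
import random


def forward(params, x):
    """Tiny network: one input, H tanh hidden units, one linear output."""
    h = [math.tanh(w * x + b) for w, b in zip(params["w1"], params["b1"])]
    y_hat = sum(w * hk for w, hk in zip(params["w2"], h)) + params["b2"]
    return h, y_hat


def loss_and_grads(params, data):
    """Mean squared error plus its gradients, computed by backpropagation:
    the output error is passed backwards through each layer via the chain rule."""
    H = len(params["w1"])
    g = {"w1": [0.0] * H, "b1": [0.0] * H, "w2": [0.0] * H, "b2": 0.0}
    n = len(data)
    loss = 0.0
    for x, y in data:
        h, y_hat = forward(params, x)
        err = y_hat - y
        loss += err * err / n
        d_out = 2.0 * err / n                # dLoss / dy_hat for this sample
        g["b2"] += d_out
        for k in range(H):
            g["w2"][k] += d_out * h[k]       # output-layer weight gradient
            d_h = d_out * params["w2"][k]    # error sent back to hidden unit k
            d_pre = d_h * (1.0 - h[k] ** 2)  # through tanh'(z) = 1 - tanh(z)^2
            g["w1"][k] += d_pre * x
            g["b1"][k] += d_pre
    return loss, g


def train(data, hidden=4, lr=0.1, steps=2000, seed=0):
    """Gradient descent: repeatedly nudge every parameter against its gradient."""
    rng = random.Random(seed)
    params = {name: [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
              for name in ("w1", "b1", "w2")}
    params["b2"] = 0.0
    history = []
    for _ in range(steps):
        loss, g = loss_and_grads(params, data)
        history.append(loss)
        for name in ("w1", "b1", "w2"):
            params[name] = [p - lr * dp for p, dp in zip(params[name], g[name])]
        params["b2"] -= lr * g["b2"]
    return params, history


# Hypothetical toy task: fit y = x**2 on nine points in [-1, 1].
data = [(i / 4, (i / 4) ** 2) for i in range(-4, 5)]
params, history = train(data)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

A common sanity check for hand-written backpropagation is to compare each analytic gradient with a finite-difference estimate of the loss.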

Memory Systems - Storage and Retrieval

Biological Memory

The human brain is a remarkable organ, often likened to a sophisticated computer, but its memory systems are far more intricate and fascinating. Imagine juggling several balls in the air while trying to remember the lyrics to your favorite song—this is akin to how our memory functions! The brain employs various memory systems that help us navigate daily life, from recalling past experiences to mastering new skills.

Working Memory

• Capacity: Classically estimated at 7±2 items; more recent research suggests a limit closer to about four chunks.

• Function: Temporarily holds and actively manipulates information.

• Dependence: Requires attention for effective processing.

Long-Term Memory

Long-term memory is further categorized into:

• Episodic Memory: Personal experiences and events.

• Semantic Memory: Facts and general knowledge.

• Procedural Memory: Skills and tasks learned through practice.

Memory Formation Process

1. Encoding: Initial processing of information into a format suitable for storage.

2. Consolidation: Stabilization of memories, often enhanced during sleep and through repetition.

3. Retrieval: Accessing stored memories when needed.

4. Reconsolidation: Reactivating and potentially modifying memories during retrieval.

These systems work together to ensure that information is effectively stored and recalled, allowing individuals to learn from experiences and apply knowledge in various contexts.

Artificial Memory Systems

LLMs use memory mechanisms that loosely resemble human memory. Imagine a librarian who not only knows every book but can also find the right one based on your vague hints; this is similar to how LLMs use attention memory.

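In attention memory, self-attention layers let each word weigh the relevance of every other word in the same passage, while cross-attention connects one sequence to another (for example, a translation being generated to its source sentence), like a detective linking clues from different sources. Keys and values, the vectors under which each token’s information is indexed and stored, keep essential information organized for quick lookup, and position encoding helps the model understand word order, just like following a story’s sequence.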
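The "librarian" lookup above is scaled dot-product attention: each query is compared with every key, the scores become weights via softmax, and the output is a weighted blend of the values. A minimal sketch under simplifying assumptions: real Transformers first project tokens into separate query, key, and value vectors with learned weight matrices and use many heads, while this sketch uses hypothetical token vectors directly. The sine/cosine position encoding follows the scheme from the original Transformer paper.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention.

    Each query is compared with every key; softmax turns the scaled scores
    into weights that sum to 1; the output is the weighted average of the
    values. Self-attention: queries, keys and values come from the same
    sequence. Cross-attention: queries come from one sequence and
    keys/values from another.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


def sinusoidal_positions(n_positions, d_model):
    """Fixed sine/cosine position encodings, one vector per position."""
    return [[math.sin(pos / 10000 ** (i / d_model)) if i % 2 == 0
             else math.cos(pos / 10000 ** ((i - 1) / d_model))
             for i in range(d_model)]
            for pos in range(n_positions)]


# Hypothetical 4-dimensional embeddings for a three-word sentence.
tokens = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
positions = sinusoidal_positions(len(tokens), 4)
x = [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(tokens, positions)]

self_attended = attention(x, x, x)           # the sentence attends to itself
other = [[0.5, 0.5, 0.5, 0.5]]               # e.g. a single source-side vector
cross_attended = attention(x, other, other)  # queries from x, keys/values from `other`
print(self_attended[0])
print(cross_attended[0])
```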

LLMs also tap into external memory systems. Think of a high-tech library that learns and adapts.

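Memory networks store information in an explicit memory that can be read and updated, while Neural Turing Machines pair a neural network with an external memory matrix that it reads from and writes to through differentiable, attention-like addressing.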
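The read/write idea can be sketched with content-based addressing in the style of a Neural Turing Machine: compare a key with every memory row, turn the similarities into soft weights, then read a weighted blend or erase-and-add in proportion to those weights. This is only a partial, hypothetical illustration: a full NTM also uses location-based shifting, gating, and sharpening, and learns its keys with a controller network rather than using hand-picked values.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cosine(a, b):
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / (den + 1e-8)


def address(memory, key, sharpness=10.0):
    """Content-based addressing: attend to memory rows that resemble `key`."""
    return softmax([sharpness * cosine(row, key) for row in memory])


def read(memory, weights):
    """Soft read: a weighted blend of memory rows."""
    return [sum(w * row[j] for w, row in zip(weights, memory))
            for j in range(len(memory[0]))]


def write(memory, weights, erase, add):
    """NTM-style write: each row is partly erased, then added to, in
    proportion to its address weight."""
    return [[m * (1.0 - w * e) + w * a for m, e, a in zip(row, erase, add)]
            for row, w in zip(memory, weights)]


memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
w = address(memory, key=[0.0, 0.9, 0.1], sharpness=50.0)
recalled = read(memory, w)
print(recalled)  # close to row 1
memory = write(memory, w, erase=[1.0, 1.0, 1.0], add=[0.0, 0.0, 5.0])
print(memory[1])  # row 1 now close to [0, 0, 5]
```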

Retrieval-augmented generation (RAG) fetches relevant documents from an external store and adds them to the model’s input, making interactions richer and better grounded, like having a knowledgeable librarian at your side.
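The retrieval half of RAG can be sketched in a few lines: score stored documents against the question, keep the best matches, and prepend them to the prompt. The sketch below assumes a crude word-count (bag-of-words) similarity as a stand-in for the learned embeddings and vector databases real systems use; the documents and prompt template are hypothetical, and the final call to an actual LLM is left out.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lower-cased word counts: a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    num = sum(a[t] * b[t] for t in set(a) & set(b))
    den = (math.sqrt(sum(v * v for v in a.values()))
           * math.sqrt(sum(v * v for v in b.values())))
    return num / den if den else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Augment the question with retrieved context before it goes to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "The neocortex has six layers.",
    "Dopamine is associated with reward signaling.",
    "Backpropagation computes gradients of the loss.",
]
print(build_prompt("How many layers does the neocortex have?", docs, k=1))
# The resulting prompt would then be sent to an LLM (not shown here).
```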

The Learning Process - Adaptation and Growth

BRAIN

Human survival and longevity are deeply rooted in our remarkable ability to adapt and learn in response to changing circumstances. This adaptability is what has allowed us to thrive in diverse environments over thousands of years. Our brains are wired for this purpose, constantly processing information and adjusting to new situations, which is essential for navigating the complexities of life.

Synaptic Plasticity: The Brain’s Adaptation Mechanism

The key to this adaptability is synaptic plasticity, which involves modifying the connections between neurons. We touched on it earlier; let’s now take a more in-depth look.

This process can be broken down into two main types:

• Long-term potentiation (LTP): This occurs when synapses are frequently used, making them stronger and enhancing communication between neurons. LTP is essential for forming memories and learning new skills.

• Long-term depression (LTD): In contrast, LTD weakens connections that are rarely activated, helping the brain prune unnecessary pathways and maintain efficiency.

Through these processes, the brain can store memories and adjust to new experiences, which is crucial for survival. By continually adapting its neural connections, the brain equips us to handle various challenges, learn from our environment, and ultimately thrive over time.

BOTS

The ability to adapt is a key point of difference between human brains and AI models, and humans excel here thanks to millions of years of evolution. Recently, the focus on adaptation and learning has surged in AI development, leading to innovative models that can learn from user interactions and prompts.

Parameter Updates

Backpropagation: This method allows AI to learn from mistakes by adjusting its parameters based on errors.

Learning Rate Adaptation: This technique adjusts how quickly the model learns, optimizing training efficiency.
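The source doesn't name a specific technique; Adam is one widely used example of per-parameter learning-rate adaptation. A minimal sketch on a hypothetical, badly scaled quadratic bowl, using Adam's usual default coefficients and an illustrative step size of 0.1:

```python
import math


def adam(grad_fn, params, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Adam optimizer: each parameter gets its own effective step size,
    scaled by running estimates of its gradient's mean (m) and
    uncentred variance (v)."""
    m = [0.0] * len(params)
    v = [0.0] * len(params)
    params = list(params)
    for t in range(1, steps + 1):
        g = grad_fn(params)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1 - beta1) * gi
            v[i] = beta2 * v[i] + (1 - beta2) * gi * gi
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction for zero init
            v_hat = v[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params


# Hypothetical badly scaled bowl: f(x, y) = x**2 + 100 * y**2.
def grad(p):
    return [2 * p[0], 200 * p[1]]


print(adam(grad, [3.0, 3.0]))
```

On the steep y direction, plain gradient descent with the same step size of 0.1 would diverge (each step multiplies y by 1 - 0.1 × 200 = -19); Adam's per-parameter scaling keeps both coordinates moving toward the minimum at a similar pace.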

Architecture Adaptation

Neural Architecture Search: This automated process searches for a well-performing network design tailored to a specific task.

Dynamic Routing: This allows information to flow through various paths based on the input, enhancing adaptability.

Progressive Growing: This method gradually increases the complexity of the network during training, allowing it to learn simpler patterns first.
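"Dynamic routing" covers several techniques; one concrete, widely used form is mixture-of-experts gating, where a small gate decides which sub-networks (experts) process each input. (Capsule networks use a different algorithm under the same name.) A minimal sketch, assuming a hypothetical linear gate and two toy experts:

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(x, gate_weights, experts, top_k=1):
    """Top-k gating: a linear gate scores each expert for this input, only the
    best top_k experts run, and their outputs are mixed by renormalised gate
    probabilities. Returns (indices of experts used, combined output)."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    total = sum(probs[i] for i in chosen)
    results = [(probs[i] / total, experts[i](x)) for i in chosen]
    dim = len(results[0][1])
    combined = [sum(share * y[j] for share, y in results) for j in range(dim)]
    return chosen, combined


# Hypothetical experts: one doubles its input, the other negates it.
experts = [lambda v: [2.0 * a for a in v], lambda v: [-a for a in v]]
gate = [[1.0, 0.0], [0.0, 1.0]]  # expert 0 prefers feature 0, expert 1 feature 1

print(route([3.0, 0.0], gate, experts))  # ([0], [6.0, 0.0])
print(route([0.0, 3.0], gate, experts))  # expert 1 handles this input
print(route([1.0, 1.0], gate, experts, top_k=2))  # both experts, equally weighted
```

Because only the chosen experts run, different inputs take different paths through the model, which is the adaptability the routing idea is after.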

The ability to reason also sets human brains apart from AI. While we can draw on a long tradition of careful reasoning that stretches back to thinkers like Socrates, AI models can stumble on seemingly simple tasks, relying on statistical patterns rather than a genuine understanding of what’s going on.

Emotional Quotient

Emotional Quotient (EQ), a common shorthand for emotional intelligence, is important for how we connect with each other. As social beings, our emotions help us build relationships and understand one another better. Philosophers as far back as Aristotle examined how emotions shape judgment and action. A set of brain structures often grouped together as the limbic system plays a big role in emotion and in how we interact. A society with high emotional intelligence tends to build stronger relationships and communities.

The question of whether brain-mimicking AI models possess emotions is a fascinating one. While these models are designed to simulate human-like responses and understand emotional contexts, they do not genuinely experience emotions as humans do. Instead, they analyze patterns in data to generate empathetic responses, creating the illusion of emotional understanding.

*Let’s do a small experiment and ask the AI itself to rate its own response based on emotional quotient.*

Prompt used: How would you respond to a friend who is feeling sad and needs support?

Perplexity rated its response 8/10, noting a lack of personalization and follow-up questions. Claude rated itself 5/5, potentially reflecting overconfidence, but acknowledged the need for more subjective suggestions. GPT-4 also rated itself 8/10, pointing out areas for improvement such as memory and emotional depth.

While AI can mimic emotional responses and engage in empathetic dialogue, it remains fundamentally different from human emotional experience. As we continue to develop emotionally intelligent AI, we might just find ourselves in a world where bots can offer comfort—though they’ll never truly feel it. After all, wouldn’t it be amusing if your virtual assistant could send you a “virtual hug” while still being just a bundle of code?

Self-Taught AI: Self-Supervised Learning

The rise of self-taught AI is transforming our perspective on how machines can learn, drawing intriguing parallels to the human brain. Unlike traditional supervised AI, which depends on large amounts of human-labeled data, self-supervised learning lets AI systems derive their training signal from the raw data itself, for example by predicting a hidden or upcoming word. This loosely mirrors the way humans learn through curiosity and experience, enabling these AI models to develop a more intuitive grasp of their environment.
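The key trick is that the "labels" come from the text itself. A minimal sketch of two common ways to turn unlabeled text into training pairs: masking words for the model to fill in (BERT-style) and predicting the next word (GPT-style). The masking here is simplified (BERT's actual recipe sometimes substitutes random or unchanged tokens instead of `[MASK]`), and the 15% masking rate and whitespace tokenization are assumptions for illustration.

```python
import random

MASK = "[MASK]"


def make_masked_example(text, mask_prob=0.15, seed=0):
    """Turn raw, unlabeled text into an (input, targets) training pair:
    hide some words and ask the model to predict them. The targets come
    from the text itself, so no human labeling is needed."""
    rng = random.Random(seed)
    tokens = text.split()
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok  # position -> word the model should recover
        else:
            masked.append(tok)
    return masked, targets


def next_word_examples(text):
    """GPT-style alternative: every prefix predicts the word that follows it."""
    tokens = text.split()
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


sentence = "neurons that fire together wire together"
print(make_masked_example(sentence, mask_prob=0.3, seed=1))
print(next_word_examples("the brain learns"))
```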

Moreover, the concept of decentralized AI adds another layer to this evolution. By reducing reliance on centralized control and oversight, these systems can learn autonomously, much like how we adapt and grow without constant direction. This lack of supervision encourages flexibility and creativity in AI, pushing us closer to creating machines that think and reason in ways that resemble human cognition.

As we continue to explore these advancements, the boundary between artificial intelligence and human-like understanding becomes increasingly blurred, and we move closer to the original goal: AGI (Artificial General Intelligence).

As we wrap up this exploration of the fascinating interplay between human cognition and artificial intelligence, it’s clear that both the brain and bots have their unique strengths and quirks. While our brains are masterpieces of evolution, capable of creativity, empathy, and complex reasoning, AI models are speed demons, crunching data at lightning-fast rates.

Imagine a world where your virtual assistant not only answers your questions but also offers a virtual hug—if only it could truly feel! As we inch closer to Artificial General Intelligence (AGI), the dance between silicon and synapses promises to be an exhilarating journey. Who knows? One day, we might just find ourselves asking our AI, “How do you really feel?”

About Cluster Protocol

Cluster Protocol is a decentralized infrastructure for AI that enables anyone to build, train, deploy and monetize AI models within a few clicks. Our mission is to democratize AI by making it accessible, affordable, and user-friendly for developers, businesses, and individuals alike. We are dedicated to enhancing AI model training and execution across distributed networks. The platform employs advanced techniques such as fully homomorphic encryption and federated learning to safeguard data privacy and promote secure data localization.

Cluster Protocol also supports decentralized datasets and collaborative model training environments, which reduce the barriers to AI development and democratize access to computational resources. We believe in the power of templatization to streamline AI development.

Cluster Protocol offers a wide range of pre-built AI templates, allowing users to quickly create and customize AI solutions for their specific needs. Our intuitive infrastructure empowers users to create AI-powered applications without requiring deep technical expertise.

Cluster Protocol provides the necessary infrastructure for creating intelligent agentic workflows that can autonomously perform actions based on predefined rules and real-time data. Additionally, individuals can leverage our platform to automate their daily tasks, saving time and effort.
