Decentralized AI: Transforming Access and Execution of Artificial Intelligence

May 18, 2024


*“Artificial intelligence is not a substitute for human intelligence; it is a tool to amplify human creativity and ingenuity.” (Fei-Fei Li, Co-Director of the Stanford Institute for Human-Centered Artificial Intelligence and Professor of Computer Science at Stanford University)*

As the field of Artificial Intelligence (AI) continues to evolve, several transformative trends are emerging that promise to revolutionize the way we develop, deploy, and scale AI systems. Among these trends, decentralized compute, decentralized datasets, decentralized AI agents, and scalable AI are particularly noteworthy. This post examines each concept: its defining characteristics, how it differs from traditional centralized approaches, and the potential it holds for the future of AI.

Decentralized Compute: Empowering AI with Distributed Power

Decentralized compute refers to the distribution of computational power across a network of nodes rather than relying on centralized data centers. This approach leverages idle computational resources from a variety of sources, such as personal computers, research machines, and even crypto mining rigs, to create a vast, decentralized network capable of performing intensive computational tasks.

How Decentralized Compute Works:

1. Distributed Network: Nodes in the network contribute their idle computational power, forming a collective resource pool.

2. Task Allocation: Tasks are distributed across the network based on the availability and capability of nodes.

3. Security and Privacy: Workloads run in isolated containers, which separate them from the host system and from other jobs and help protect sensitive information.

4. Incentives: Contributors are rewarded with tokens or other forms of compensation for providing their computational resources.
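The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not Cluster Protocol's scheduler: the `Node` and `Task` fields, the greedy "most free GPUs" placement rule, and the per-GPU token reward are all hypothetical, and rewards are credited at assignment time rather than after verified completion.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    node_id: str
    free_gpus: int              # idle GPUs the node currently offers
    gpu_memory_gb: int          # memory per GPU
    tokens_earned: float = 0.0  # hypothetical reward balance


@dataclass
class Task:
    task_id: str
    gpus_needed: int
    min_memory_gb: int
    reward_per_gpu: float       # hypothetical token payout per GPU used


def allocate(tasks: List[Task], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Greedily place each task on the capable node with the most free GPUs."""
    assignments: Dict[str, Optional[str]] = {}
    # Place the largest tasks first so they are not crowded out by small ones.
    for task in sorted(tasks, key=lambda t: t.gpus_needed, reverse=True):
        capable = [
            n for n in nodes
            if n.free_gpus >= task.gpus_needed and n.gpu_memory_gb >= task.min_memory_gb
        ]
        if not capable:
            assignments[task.task_id] = None  # no node can run it right now
            continue
        node = max(capable, key=lambda n: n.free_gpus)
        node.free_gpus -= task.gpus_needed
        # Simplification: credit the reward on assignment, not on verified completion.
        node.tokens_earned += task.gpus_needed * task.reward_per_gpu
        assignments[task.task_id] = node.node_id
    return assignments


nodes = [Node("laptop", 1, 8), Node("lab-server", 4, 40), Node("mining-rig", 6, 12)]
tasks = [Task("train", 4, 24, 2.0), Task("infer", 1, 8, 0.5), Task("render", 2, 12, 1.0)]
print(allocate(tasks, nodes))
# {'train': 'lab-server', 'render': 'mining-rig', 'infer': 'mining-rig'}
```

A real network would also weigh latency, price, and each node's reliability history before placing work.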

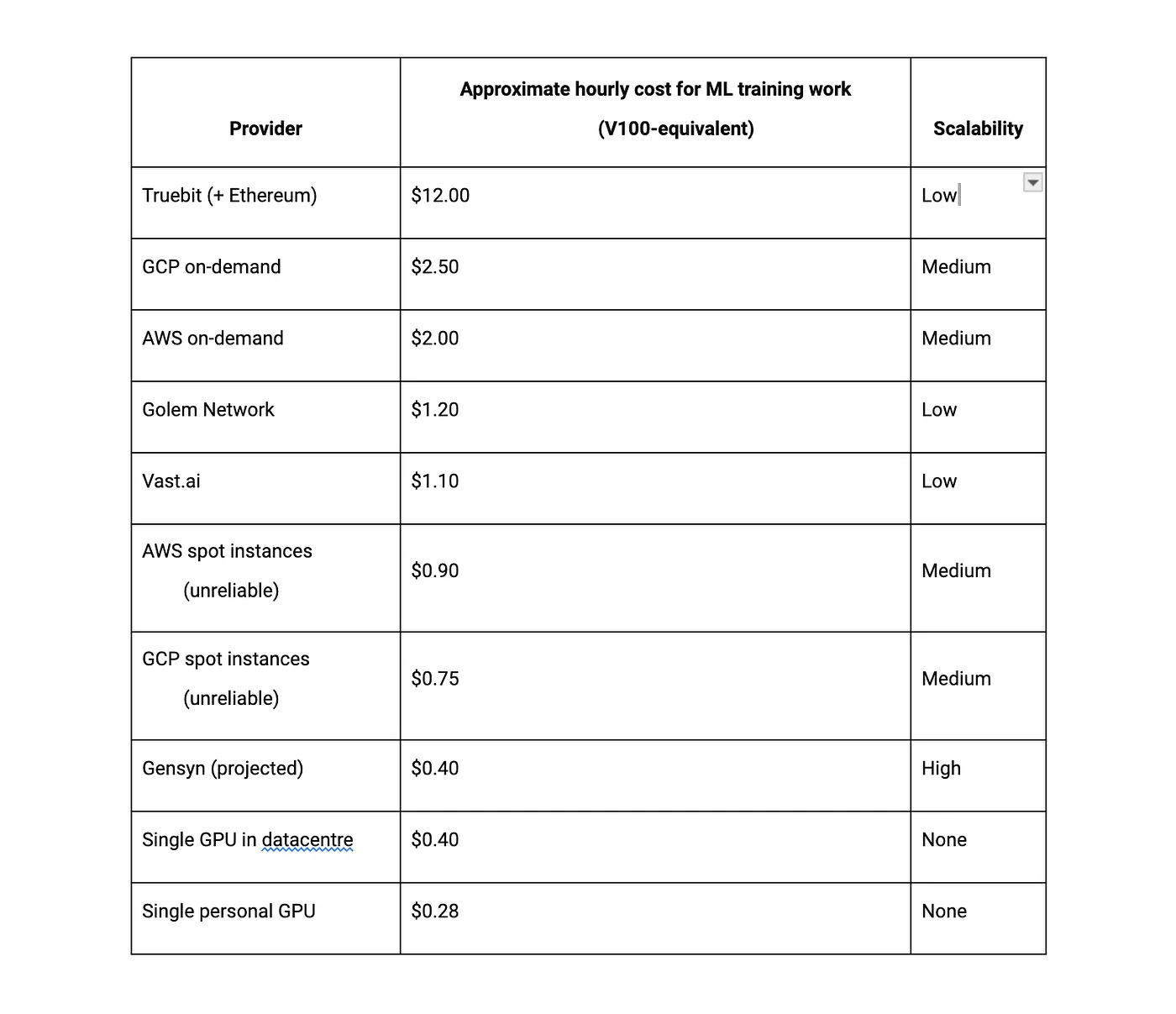

Comparison with Centralized Compute:

Decentralized compute marketplaces, often built as DePIN (Decentralized Physical Infrastructure Network) projects, aim to provide access to powerful GPU resources at a fraction of the cost of traditional cloud providers such as AWS, Google Cloud, and Azure. Because they run on a peer-to-peer network, these projects let users rent GPUs in a wide range of configurations, minimizing upfront costs and avoiding long-term hardware commitments.

Advantages of Decentralized Compute:

• Cost-Effective: Reduces the need for expensive infrastructure investments.

• Scalable: Capacity grows as more nodes join the network.

• Resilient: With no single data center to target, the network is more resistant to attacks and outages.

• Environmental Benefits: Putting idle hardware to work can reduce the environmental impact of large-scale computations.
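One common way a distributed network turns many unreliable machines into a resilient service is redundant execution: send the same task to several nodes and accept a result only when enough of them agree. The sketch below assumes nodes are plain Python callables that return hashable results; the quorum rule is a generic technique, not a documented feature of any specific network.

```python
from collections import Counter
from typing import Any, Callable, Hashable, List, Optional


def run_with_quorum(task_input: Any, nodes: List[Callable[[Any], Hashable]],
                    quorum: int) -> Optional[Hashable]:
    """Run the same task on several nodes; return the majority result if it reaches quorum."""
    results = []
    for node in nodes:
        try:
            results.append(node(task_input))
        except ConnectionError:
            continue  # an unreachable node contributes no vote
    if not results:
        return None
    value, votes = Counter(results).most_common(1)[0]
    return value if votes >= quorum else None


def honest(x):
    return x * x


def faulty(x):
    return -1  # a buggy or malicious node returning a wrong answer


def offline(x):
    raise ConnectionError("node unreachable")


print(run_with_quorum(7, [honest, honest, faulty, offline], quorum=2))  # 49
print(run_with_quorum(7, [honest, faulty, offline], quorum=2))          # None
```

Requiring agreement costs extra compute per task, which is the usual price of tolerating faulty or dishonest nodes.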

Decentralized Datasets: Democratizing Data Access

Decentralized datasets refer to the use of blockchain and other decentralized technologies to manage and distribute data. This approach ensures that data is securely stored and accessed across a distributed network, providing greater security, transparency, and control.
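The paragraph above doesn't specify how data is spread across nodes. One widely used technique is rendezvous (highest-random-weight) hashing, sketched below under the assumption that each dataset is split into named chunks and every chunk is stored on three nodes. Each node scores each chunk with a hash and the top scorers hold the replicas, so no central index is needed and removing a node only affects the chunks it held. The chunk naming and replica count are hypothetical.

```python
import hashlib
from typing import List


def _score(node_id: str, chunk_id: str) -> int:
    """Deterministic pseudo-random weight for a (node, chunk) pair."""
    digest = hashlib.sha256(f"{node_id}:{chunk_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place_replicas(chunk_id: str, node_ids: List[str], replicas: int = 3) -> List[str]:
    """Rendezvous hashing: the highest-scoring nodes store copies of the chunk."""
    ranked = sorted(node_ids, key=lambda n: _score(n, chunk_id), reverse=True)
    return ranked[:replicas]


nodes = [f"node-{i}" for i in range(10)]
placement = place_replicas("dataset-7/chunk-0", nodes)
print(placement)  # three distinct node ids, identical on every machine that runs this
```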

How Decentralized Datasets Work:

Tokenization of Data: Data is tokenized into assets that can be securely traded and accessed on a blockchain.

Secure Access: Access to data is controlled through smart contracts and cryptographic techniques.

Data Monetization: Data providers can monetize their data by offering it on decentralized marketplaces.
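The three mechanisms above can be simulated off-chain to make them concrete. In this sketch a Python class stands in for a smart contract: a dataset is identified by the SHA-256 hash of its bytes (so buyers can check they received the listed content), payment moves hypothetical token units to the owner, and access is granted by recording the buyer. Class and method names are illustrative, not from any real marketplace, and the encryption and signatures a real system would use are omitted.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class DataToken:
    token_id: str                                # SHA-256 of the dataset bytes
    owner: str
    price: int                                   # hypothetical token units
    buyers: Set[str] = field(default_factory=set)


class DataMarketplace:
    """In-memory stand-in for a data-marketplace smart contract (not a blockchain)."""

    def __init__(self) -> None:
        self.tokens: Dict[str, DataToken] = {}
        self.balances: Dict[str, int] = {}

    def list_dataset(self, owner: str, data: bytes, price: int) -> str:
        # Tokenization: the content hash doubles as a verifiable identifier.
        token_id = hashlib.sha256(data).hexdigest()
        self.tokens[token_id] = DataToken(token_id, owner, price)
        return token_id

    def buy_access(self, buyer: str, token_id: str) -> None:
        token = self.tokens[token_id]
        if buyer == token.owner or buyer in token.buyers:
            return  # already has access; don't charge twice
        if self.balances.get(buyer, 0) < token.price:
            raise ValueError("insufficient balance")
        # Monetization: payment goes straight to the data provider.
        self.balances[buyer] -= token.price
        self.balances[token.owner] = self.balances.get(token.owner, 0) + token.price
        token.buyers.add(buyer)

    def has_access(self, user: str, token_id: str) -> bool:
        # Secure access: only the owner and paying buyers are allowed.
        token = self.tokens[token_id]
        return user == token.owner or user in token.buyers


market = DataMarketplace()
market.balances["bob"] = 100
tid = market.list_dataset("alice", b"sensor readings", price=30)
market.buy_access("bob", tid)
print(market.has_access("bob", tid), market.balances)  # True {'bob': 70, 'alice': 30}
```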

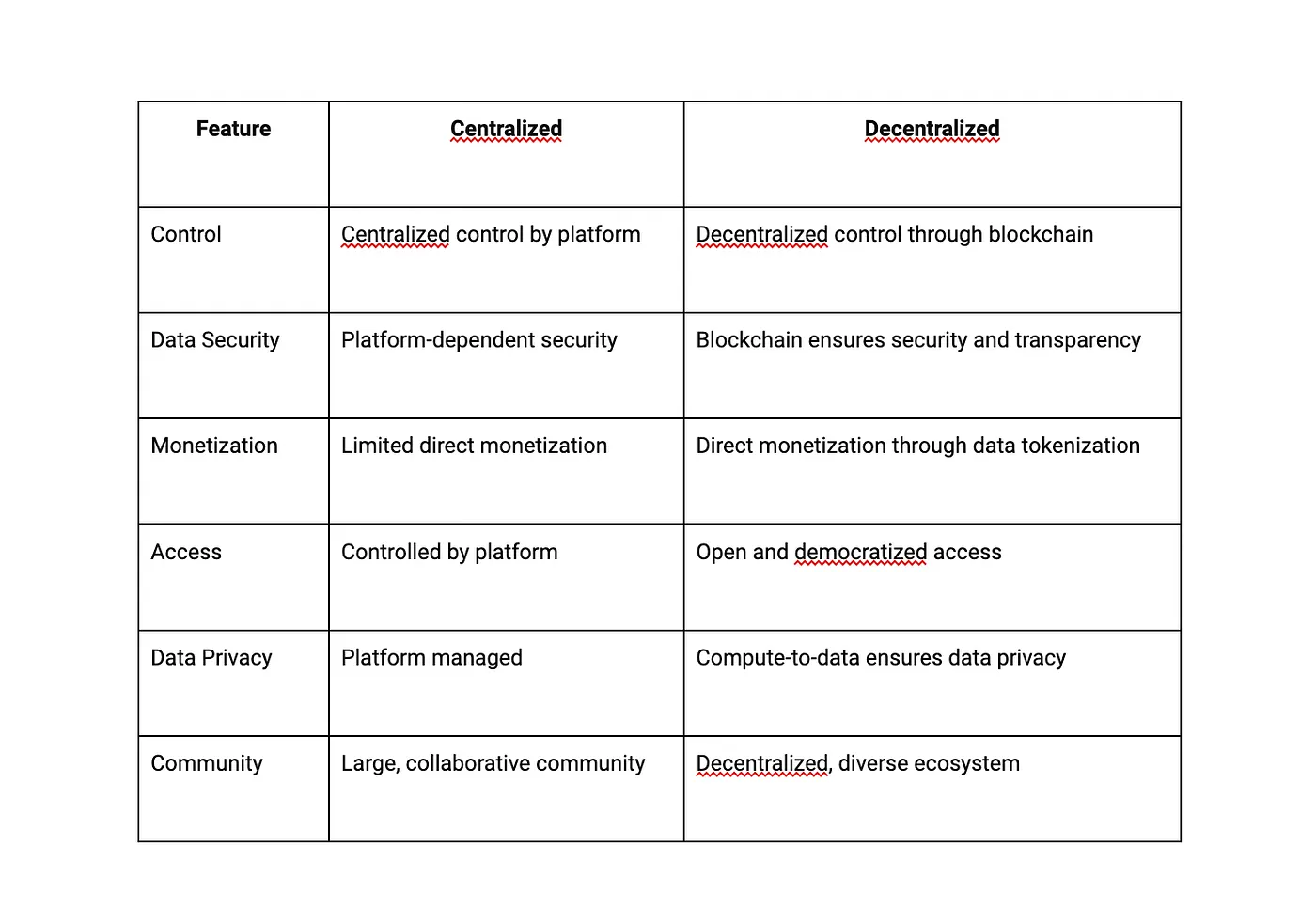

Comparison with Centralized Datasets:

Traditional Platforms: Centralized platforms host large datasets and provide tools for data scientists to work with them. However, these platforms often centralize control and may not offer the same level of security and transparency as decentralized solutions.

Advantages of Decentralized Datasets:

Enhanced Security: Data is encrypted and distributed across multiple nodes, reducing the risk of breaches.

Increased Transparency: Transactions recorded on a blockchain, such as purchases of data access, are public and verifiable.

Monetization Opportunities: Data providers can directly monetize their datasets, creating new revenue streams.

Data Sovereignty: Providers retain control over their data, deciding how and by whom it is used.
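The transparency advantage rests on a simple property: each block in a blockchain commits to the hash of the previous one, so quietly rewriting history is detectable. The sketch below shows only that hash-chaining idea, applied to a hypothetical data-access log. It has no consensus, signatures, or replication; in a real chain those are what stop an attacker from simply recomputing every later hash.

```python
import hashlib
import json
from typing import List, Tuple

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    # Canonical JSON so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode()).hexdigest()


class AccessLog:
    """Append-only log in which every entry commits to the entry before it."""

    def __init__(self) -> None:
        self.entries: List[Tuple[dict, str]] = []

    def append(self, record: dict) -> None:
        prev = self.entries[-1][1] if self.entries else GENESIS
        self.entries.append((dict(record), _entry_hash(prev, record)))

    def verify(self) -> bool:
        """Recompute the chain; an edit that doesn't also rewrite every later hash is caught."""
        prev = GENESIS
        for record, stored in self.entries:
            if _entry_hash(prev, record) != stored:
                return False
            prev = stored
        return True


log = AccessLog()
log.append({"user": "bob", "dataset": "sensor-readings", "action": "read"})
log.append({"user": "carol", "dataset": "sensor-readings", "action": "read"})
print(log.verify())                    # True
log.entries[0][0]["user"] = "mallory"  # tamper with history
print(log.verify())                    # False
```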

Decentralized AI Agents: The New Era of Intelligent Systems

Decentralized AI agents are intelligent systems that operate on decentralized networks rather than centralized servers. These agents can perform a wide range of tasks, from executing smart contracts and trading cryptocurrencies to providing personalized services and conducting complex data analysis.

How Decentralized AI Agents Work:

Blockchain Technology: Utilizes distributed ledgers and smart contracts to automate processes and ensure transparency.

Decentralized Storage: Data is stored across multiple nodes, ensuring redundancy and security.

Inter-Agent Communication: Agents communicate directly with each other, sharing information and resources.

AI Algorithms: Employ machine learning and natural language processing to understand and respond to user inputs.
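Direct inter-agent communication can be illustrated with agents that each own an inbox and deliver messages straight into a peer's inbox, with no central server relaying them. The sketch below is an in-process simulation: the `Agent` class, the `ask`/`answer` topics, and the peer dictionary (standing in for network addresses) are hypothetical, and a real deployment would add a peer-to-peer transport and authentication.

```python
from collections import deque
from typing import Any, Deque, Dict, Tuple


class Agent:
    """Minimal agent with its own inbox; peers deliver messages to it directly."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.inbox: Deque[Tuple[str, str, Dict[str, Any]]] = deque()
        self.peers: Dict[str, "Agent"] = {}   # stand-in for peer network addresses
        self.knowledge: Dict[str, Any] = {}

    def connect(self, other: "Agent") -> None:
        self.peers[other.name] = other
        other.peers[self.name] = self

    def send(self, peer_name: str, topic: str, body: Dict[str, Any]) -> None:
        self.peers[peer_name].inbox.append((self.name, topic, body))

    def step(self) -> None:
        """Handle every message currently waiting in the inbox."""
        while self.inbox:
            sender, topic, body = self.inbox.popleft()
            if topic == "ask":
                key = body["key"]
                self.send(sender, "answer", {key: self.knowledge.get(key)})
            elif topic == "answer":
                self.knowledge.update(body)


analyst = Agent("analyst")
analyst.knowledge["sentiment:ACME"] = "positive"  # hypothetical analysis result
trader = Agent("trader")
trader.connect(analyst)

trader.send("analyst", "ask", {"key": "sentiment:ACME"})
analyst.step()  # analyst replies straight into the trader's inbox
trader.step()   # trader records the answer
print(trader.knowledge)  # {'sentiment:ACME': 'positive'}
```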

Comparison with Centralized AI Systems:

• Centralized AI: Companies like OpenAI and Google run centralized AI models, which can limit users' access to, and control over, their data and the models' capabilities.

Advantages of Decentralized AI Agents:

• Autonomy: Operate independently and make decisions based on pre-set algorithms and user inputs.

• Interconnectivity: Communicate and collaborate with other agents across the network.

• Security: Utilize encryption and decentralized storage to protect data and operations.

• Scalability: Easily scalable by adding or removing nodes in the network.

Scaling AI: Achieving Operational Excellence

Scalable AI is the ability to use machine learning algorithms and generative AI services to accomplish tasks at a pace that meets business demands. It requires infrastructure, data volumes, and human expertise to operate at the speed and scale required.

Key Components of Scalable AI:

Data Management: Integrated and complete data from various parts of the business.

Infrastructure: Heavy-duty computing power and networks to support AI models.

Human Expertise: Skilled data scientists and IT professionals to manage and optimize AI operations.

MLOps: Tools and processes for building, training, deploying, and monitoring AI models.
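Two of the MLOps steps listed above, deploying and monitoring, can be sketched as a promotion gate and a drift check. The registry class, the use of accuracy as the only metric, and the 0.05 tolerance are hypothetical choices for illustration; MLOps tools in practice track many more signals.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class ModelVersion:
    version: int
    accuracy: float  # offline score on a held-out evaluation set


@dataclass
class ModelRegistry:
    """Hypothetical registry that records versions and which one is serving."""
    history: List[ModelVersion] = field(default_factory=list)
    serving: Optional[ModelVersion] = None

    def promote_if_better(self, candidate: ModelVersion) -> bool:
        """Deploy gate: serve the candidate only if it beats the current model."""
        self.history.append(candidate)
        if self.serving is None or candidate.accuracy > self.serving.accuracy:
            self.serving = candidate
            return True
        return False


def needs_retraining(serving: ModelVersion, live_outcomes: List[int],
                     tolerance: float = 0.05, min_samples: int = 100) -> bool:
    """Monitor: flag retraining when live accuracy falls below baseline minus tolerance.

    live_outcomes holds 1 for a correct prediction and 0 for a wrong one.
    """
    if len(live_outcomes) < min_samples:
        return False  # not enough evidence yet
    return mean(live_outcomes) < serving.accuracy - tolerance


registry = ModelRegistry()
registry.promote_if_better(ModelVersion(1, 0.90))  # True: nothing serving yet
registry.promote_if_better(ModelVersion(2, 0.88))  # False: worse than v1
print(registry.serving.version)                                 # 1
print(needs_retraining(registry.serving, [1] * 80 + [0] * 20))  # True (0.80 < 0.85)
```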

Challenges in Scaling AI:

• Data: Acquiring, organizing, and analyzing large datasets.

• Processes: Iterative collaboration between business experts, IT teams, and data scientists.

• Tools: A diverse set of tools for data management, model building, and AI operations.

• Talent: Scarcity of skilled AI professionals.

• Scope: Balancing the size and complexity of AI initiatives.

• Time: The lengthy process of developing and deploying AI systems.

Importance of Scalable AI:

• Efficiency: Enhances operational speed and accuracy.

• Innovation: Drives competitive advantages and fosters innovation.

• Productivity: Improves customer satisfaction and workforce productivity.

• Resource Utilization: Optimizes the use of assets and resources.

Integrating Decentralized and Scalable AI

Combining decentralized compute, datasets, and AI agents with scalable AI practices can create a robust and resilient AI ecosystem. Here’s how these elements work together:

1. Decentralized Compute: Provides the computational power needed to run AI models efficiently and cost-effectively.

2. Decentralized Datasets: Ensures secure and transparent access to high-quality data for training and deploying AI models.

3. Decentralized AI Agents: Enhances the autonomy and interconnectivity of AI systems, enabling more complex and innovative applications.

4. Scalable AI Practices: Facilitates the integration and optimization of AI systems across the organization, ensuring they can meet business demands and drive value.

The Combined Impact:

• Security and Privacy: Enhanced protection of data and AI operations through decentralized technologies.

• Cost-Effectiveness: Reduced infrastructure and operational costs.

• Scalability and Resilience: Robust and adaptable AI systems that can scale to meet growing demands.

• Innovation and Accessibility: Democratized access to AI capabilities, fostering innovation and empowering users.

The Future with Cluster Protocol:

By integrating decentralized compute, datasets, AI agents, and scalable AI practices, Cluster Protocol is creating a comprehensive ecosystem that empowers users to build, deploy, and scale AI applications seamlessly.

This approach not only enhances the efficiency and security of AI operations but also democratizes access to cutting-edge AI capabilities, fostering innovation and driving the future of AI development.

In conclusion, the integration of decentralized compute, datasets, AI agents, and scalable AI practices represents a significant leap forward in the field of artificial intelligence. Cluster Protocol is leading this charge, providing the infrastructure and tools necessary to create a robust, secure, and scalable AI ecosystem.

The future of AI is decentralized, scalable, and incredibly promising, and Cluster Protocol is at the forefront of this exciting transformation.
