The Evolution of Decentralized AI Agents: A Comprehensive Review

Oct 24, 2024

12 min Read

How did it all start?

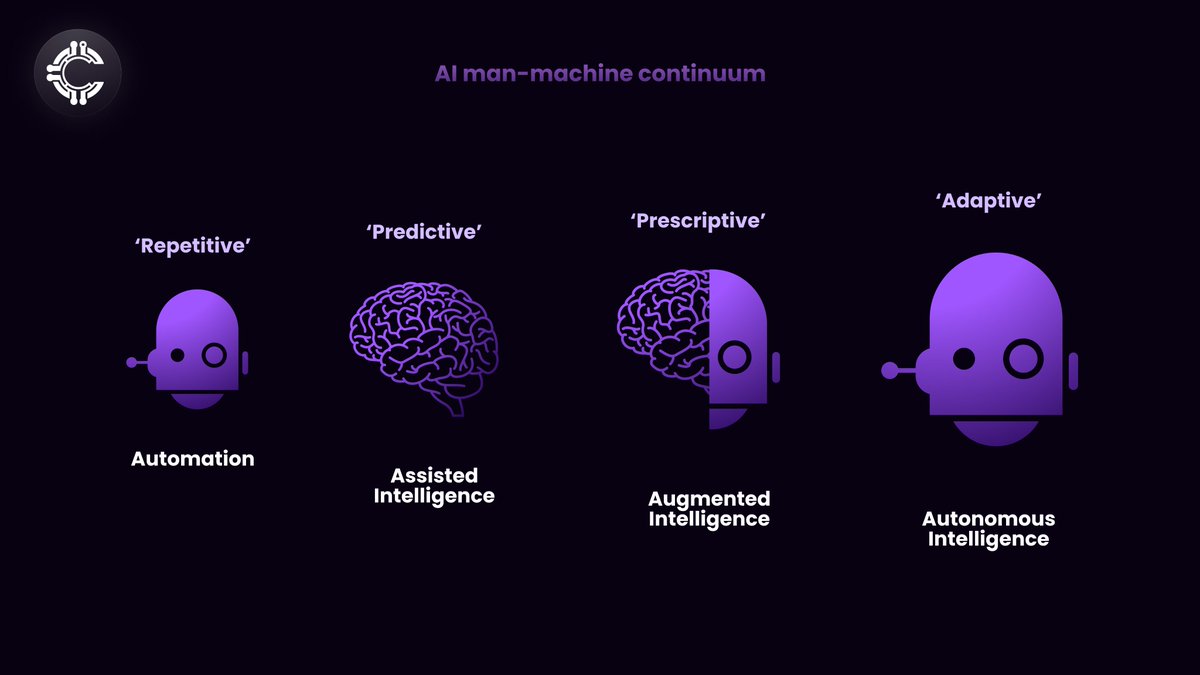

When we talk about AI, we often look back at how humans have always tried to find easier ways to do things. The Industrial Revolution and electricity gave us ways to automate work. Over the centuries, the urge to automate processes and achieve results with less effort has become part of human nature.

Automation is like teaching a machine to do a specific task over and over again, always using the same inputs and producing the same results. It's like following a recipe – if you change a step, the dish will be different.

The problem with automation is that machines are usually built to do one thing and might have trouble adjusting to changes.

AI, or Artificial Intelligence, is like a computer program that can think and solve problems in a way that mimics human intelligence. Unlike traditional software, which follows strict instructions, AI can adapt and learn from its experiences. This means it can improve its performance over time, making it more versatile and capable of handling complex tasks. AI can process information, recognize patterns, and even make decisions, much like a human would.

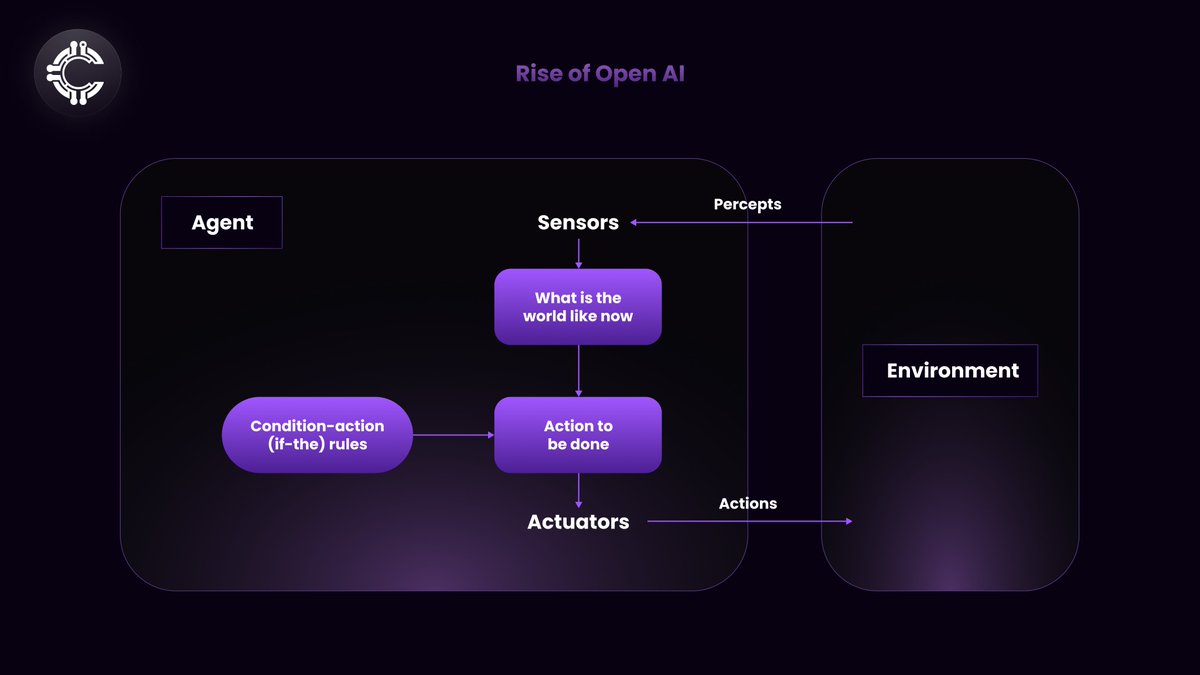

What's an AI agent?

An AI agent combines AI with automation. These software agents can take on many kinds of tasks, much like humans, and adapt to different situations. Trained on large amounts of data, they can do all sorts of things, from helping doctors to creating content and even chatting with customers on behalf of specific brands.

Rise of OpenAI

Remember when OpenAI burst onto the scene with GPT-3? It felt like a turning point. Suddenly, AI wasn't just for scientists anymore. It was accessible, powerful, and capable of generating human-quality text. The hype was real. Everyone was talking about AGI – the holy grail of AI that could understand, learn, and apply knowledge like a human.

OpenAI, founded in 2015, quickly gained prominence for its groundbreaking AI research. The release of GPT-3 in 2020, a language model with 175 billion parameters, solidified its position as a leader in the field. The model's ability to generate coherent and informative text, even when prompted with incomplete or ambiguous questions, sparked widespread excitement and speculation about the future of AI.

AI agents can be likened to individuals with a specific set of skills, where each skill involves mastering a particular set of tools.
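To make the skills-and-tools analogy concrete, here is a minimal Python sketch, assuming an agent is simply a registry of named skills, each backed by a tool function. The `Agent` class, `calculator`, and `word_counter` are hypothetical names for illustration. In a real LLM-based agent, the model itself would decide which tool to call; here the caller picks the skill explicitly.

```python
import operator
from typing import Callable, Dict

# Hypothetical tools: each "skill" is backed by a plain Python function.
OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression: str) -> str:
    """Evaluate a simple 'a <op> b' expression, e.g. '6 * 7'."""
    left, op, right = expression.split()
    return str(OPERATORS[op](float(left), float(right)))

def word_counter(text: str) -> str:
    """Count whitespace-separated words."""
    return str(len(text.split()))

class Agent:
    """A toy agent: a set of named skills, each mapped to a tool."""

    def __init__(self) -> None:
        self.skills: Dict[str, Callable[[str], str]] = {}

    def learn_skill(self, name: str, tool: Callable[[str], str]) -> None:
        self.skills[name] = tool

    def act(self, skill: str, task_input: str) -> str:
        # In an LLM-based agent the model would choose the skill; here the caller does.
        tool = self.skills.get(skill)
        if tool is None:
            return f"No tool for skill '{skill}'"
        return tool(task_input)

agent = Agent()
agent.learn_skill("math", calculator)
agent.learn_skill("count_words", word_counter)
print(agent.act("math", "6 * 7"))                    # 42.0
print(agent.act("count_words", "agents use tools"))  # 3
print(agent.act("translate", "hola"))                # No tool for skill 'translate'
```

Adding a new capability means registering one more tool, which is why agents are often described by the tools they can use rather than by a single fixed function.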

Early LLMs were limited to solving simple math problems or generating text on familiar topics. These models used fixed workflows, which, while efficient, constrained their inherent intelligence.

Let's explore the differences between fixed and dynamic workflows.

Fixed Workflows: A Controlled Approach

Fixed workflows provide a structured environment for AI development, focusing on accuracy and reliability. By following a predefined sequence, developers can ensure high-quality training and rigorous evaluation. However, the controlled nature of fixed workflows can limit the model's ability to adapt to new situations or learn from unexpected challenges.

Dynamic Workflows: A Flexible Approach

Dynamic workflows offer a more flexible approach to AI development, allowing for experimentation, exploration, and continuous improvement. This flexibility can foster innovation, lead to more powerful AI models, and create a more collaborative environment in which teams work together and share ideas.

It’s the universal balance between Power and Intelligence.
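To illustrate the difference, here is a toy Python sketch, assuming an agent whose job is to clean up a piece of text. The fixed workflow always runs the same predefined steps in order, while the dynamic one inspects the current state and chooses its next step at run time. `choose_next_step` is a hypothetical rule-based stand-in for the model that would make that decision in a real agent, and all step names are illustrative.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical text-cleaning steps an agent could apply.
STEPS: Dict[str, Callable[[str], str]] = {
    "strip": str.strip,
    "lower": str.lower,
    "depunct": lambda t: "".join(ch for ch in t if ch.isalnum() or ch.isspace()),
}

def run_fixed(text: str, plan: List[str]) -> str:
    """Fixed workflow: the same predefined steps, in the same order, every time."""
    for name in plan:
        text = STEPS[name](text)
    return text

def choose_next_step(text: str) -> Optional[str]:
    """Stand-in for a model that inspects the current state and picks the next step."""
    if text != text.strip():
        return "strip"
    if any(not (ch.isalnum() or ch.isspace()) for ch in text):
        return "depunct"
    if text != text.lower():
        return "lower"
    return None  # goal reached: nothing left to do

def run_dynamic(text: str, max_steps: int = 10) -> Tuple[str, List[str]]:
    """Dynamic workflow: decide each step at run time and stop when the goal is met."""
    trace: List[str] = []
    for _ in range(max_steps):  # a step budget keeps the loop bounded
        step = choose_next_step(text)
        if step is None:
            break
        text = STEPS[step](text)
        trace.append(step)
    return text, trace

print(run_fixed("  Hello, World!  ", ["strip", "lower", "depunct"]))  # hello world
print(run_dynamic("Hello world"))                                       # ('hello world', ['lower'])
```

The fixed pipeline is predictable and easy to test, while the dynamic loop only does the work the input actually needs; the `max_steps` budget is one simple guard against a dynamic workflow running forever.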

AGI Hype: The Holy Grail of AI

Imagine building a super-intelligent AI. It's like building a skyscraper: you need a solid foundation, but you also need to be prepared for unexpected challenges. Striking that balance between fixed and dynamic workflows is central to the pursuit of AGI, or Artificial General Intelligence.

In recent years, the LLMs behind ChatGPT and Gemini (previously Bard) have quietly transitioned from stand-alone chatbots to nearly autonomous agents capable of outsourcing tasks and adjusting their behavior in response to inputs from their environment or from themselves. These quasi-agents can now run applications to search the web or solve math problems, and can even act on user prompts to check and correct their own work, edging closer to true AGI.
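The "check and correct" behaviour can be sketched as a generate, verify, revise loop. In this minimal Python example, `HypotheticalModel` is a stand-in for an LLM (not a real API) that revises its guess for a square root when told it was too high or too low, and `check_square_root` plays the role of a tool: verifying an answer takes one multiplication, which is far easier than producing it.

```python
from typing import List, Optional, Tuple

class HypotheticalModel:
    """Stand-in for an LLM (not a real API): it proposes an integer square root and
    narrows its guess whenever the verifier says the last one was too high or too low."""

    def __init__(self, n: int) -> None:
        self.low, self.high = 0, max(n, 1)
        self.last: Optional[int] = None

    def propose(self, feedback: Optional[str]) -> int:
        if self.last is not None and feedback == "too high":
            self.high = self.last - 1
        elif self.last is not None and feedback == "too low":
            self.low = self.last + 1
        self.last = (self.low + self.high) // 2
        return self.last

def check_square_root(n: int, candidate: int) -> Optional[str]:
    """Tool call: checking needs one multiplication, far easier than solving."""
    square = candidate * candidate
    if square == n:
        return None  # verified, no correction needed
    return "too high" if square > n else "too low"

def solve_with_self_check(n: int, max_attempts: int = 64) -> Tuple[Optional[int], List[int]]:
    """Generate -> verify -> revise until the answer checks out or the budget runs out."""
    model = HypotheticalModel(n)
    feedback: Optional[str] = None
    attempts: List[int] = []
    for _ in range(max_attempts):
        candidate = model.propose(feedback)
        attempts.append(candidate)
        feedback = check_square_root(n, candidate)
        if feedback is None:
            return candidate, attempts
    return None, attempts  # never return an unverified answer

print(solve_with_self_check(144))     # (12, [72, 35, 17, 8, 12])
print(solve_with_self_check(10)[0])   # None
```

For `144`, the loop proposes 72, 35, 17, and 8 before the verifier accepts 12; for a number with no integer square root, it gives up after `max_attempts` rather than returning an answer that failed the check.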

A major milestone in AI history will come when AI acquires common sense. Achieving AGI, or human-like intelligence, is an ongoing effort, and each upgrade brings us a little closer.

Let’s get to the trinity of AGI

Neural Networks: Neural networks are computer systems that learn from data using a structure loosely inspired by the human brain. They use interconnected nodes to process information layer by layer. Deep neural networks, such as recurrent neural networks (RNNs) and transformers, have been remarkably successful at tasks like understanding language and recognizing images. Some researchers believe these models could eventually be scaled to a level approaching human intelligence.

Cognitive Architectures: Cognitive architectures aim to model human cognition by combining various components, such as perception, memory, reasoning, and learning. By integrating these components into a unified framework, researchers hope to create AI systems that can exhibit human-like intelligence.

Symbolic AI: Symbolic AI works like a computer program that thinks and reasons with explicit symbols and logical rules, representing and manipulating knowledge directly. While it has limitations in dealing with unstructured data, it can be combined with neural networks to create hybrid systems that are better at reasoning and planning.
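As a minimal illustration of symbolic reasoning, the Python sketch below implements forward chaining, a classic rule-based inference technique: it keeps applying if-then rules to a set of known facts until no new facts can be derived. The facts and rules are hypothetical examples.

```python
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (premises, conclusion)

# Hypothetical knowledge base: IF all premises hold THEN the conclusion holds.
RULES: List[Rule] = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_fly"}), "can_migrate"),
    (frozenset({"is_penguin"}), "is_bird"),
]

def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

print(sorted(forward_chain({"has_feathers", "can_fly"}, RULES)))
# ['can_fly', 'can_migrate', 'has_feathers', 'is_bird']
print(sorted(forward_chain({"is_penguin"}, RULES)))
# ['is_bird', 'is_penguin']
```

Every derived fact can be traced back to the rules that produced it, which is the interpretability that makes symbolic AI attractive for reasoning, while the rules themselves still have to be written by hand.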

By combining Symbolic AI, Cognitive Architectures, and Neural Networks, researchers hope to create AGI systems that can:

1. Reason and plan: Symbolic AI can provide the logical framework for reasoning and planning.

2. Learn from experience: Neural networks can enable AGI systems to learn from data and adapt to new situations.

3. Understand the world: Cognitive architectures can help AGI systems develop a deeper understanding of the world and its complexities.

While each of these components has its strengths and weaknesses, a synergistic approach that uses the best of all three may achieve true AGI. By combining the power of symbolic reasoning, the flexibility of cognitive architectures, and the learning capabilities of neural networks, researchers may be able to develop AI systems that can truly rival human intelligence in the near future.
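A toy Python sketch of that combination, under heavy simplifying assumptions: a single perceptron (the simplest neural network) learns from labelled examples to turn raw sensor readings into a symbol, a hand-written rule table (the symbolic part) maps that symbol to an action, and a small perceive, remember, reason loop plays the role of the cognitive architecture. The sensor features, symbols, and rules are all hypothetical; real cognitive architectures such as Soar or ACT-R are far richer.

```python
from typing import Dict, List, Tuple

Example = Tuple[Tuple[int, int], int]  # ((feature_1, feature_2), label)

def train_perceptron(data: List[Example], epochs: int = 20) -> Tuple[int, int, int]:
    """Neural part (learn from experience): the classic perceptron learning rule."""
    w1 = w2 = b = 0
    for _ in range(epochs):
        for (x1, x2), label in data:
            prediction = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            error = label - prediction  # 0 when correct, +1 or -1 when wrong
            w1, w2, b = w1 + error * x1, w2 + error * x2, b + error
    return w1, w2, b

# Hypothetical sensor data: (motion_detected, door_open) -> 1 if someone is entering.
TRAINING_DATA: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

# Symbolic part (reason and plan): explicit, human-readable rules.
RULES: Dict[str, str] = {
    "someone_entering": "turn_on_lights",
    "nobody_entering": "stay_idle",
}

class HybridAgent:
    """Cognitive-architecture-style cycle: perceive -> remember -> reason."""

    def __init__(self) -> None:
        self.weights = train_perceptron(TRAINING_DATA)
        self.memory: List[str] = []  # history of perceived symbols

    def perceive(self, motion: int, door_open: int) -> str:
        w1, w2, b = self.weights
        score = w1 * motion + w2 * door_open + b
        symbol = "someone_entering" if score > 0 else "nobody_entering"
        self.memory.append(symbol)  # perception writes into memory
        return symbol

    def reason(self) -> str:
        if not self.memory:
            return "stay_idle"
        return RULES[self.memory[-1]]  # rules act on the latest perceived symbol

agent = HybridAgent()
for reading in [(1, 1), (0, 1)]:
    agent.perceive(*reading)
    print(agent.reason())  # turn_on_lights, then stay_idle
```

The division of labour mirrors the list above: the learned component handles perception, while the decision logic stays explicit and auditable.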

As the saying goes, the journey is often as important as the destination. Reaching AGI is a significant milestone, but the challenges and potential consequences of its underlying architecture don't stop there.

Privacy First: Federated Learning in AI

One of the biggest hurdles in AI development has always been data privacy. Companies that train these models often hold exclusive ownership of the training data, raising concerns about how this data might be used in the future. This creates a significant barrier to progress in AI development.

To address this issue, federated learning emerged as a solution. By decentralizing the training process and keeping data local to devices, federated learning offers a powerful shield for data privacy in AI development.

Federated learning: First step towards decentralization

Remember the Facebook scandals a few years back? Mark Zuckerberg had to face the music in front of both US and European lawmakers over privacy breaches. The public was outraged, and strict rules were put in place to protect our private data. It was like a wake-up call for big tech companies, forcing them to rethink how they handled our information.

While the new privacy rules were a step in the right direction, they also had an unintended consequence: they made it harder for companies to train their AI models. AI needs a lot of data to learn, and with stricter privacy rules, training it was becoming like trying to bake a cake without any ingredients. The public data available for AI training was limited, and some estimates suggested it could run out by 2026, raising concerns about how future generations of AI models would be trained.

That's where federated learning enters the story. Google introduced the term "federated learning" in 2016, before the Facebook hearings, so it was not simply a response to the data privacy scandals involving tech giants like Facebook. It was a natural development in machine learning and AI, and growing privacy concerns made it all the more relevant.

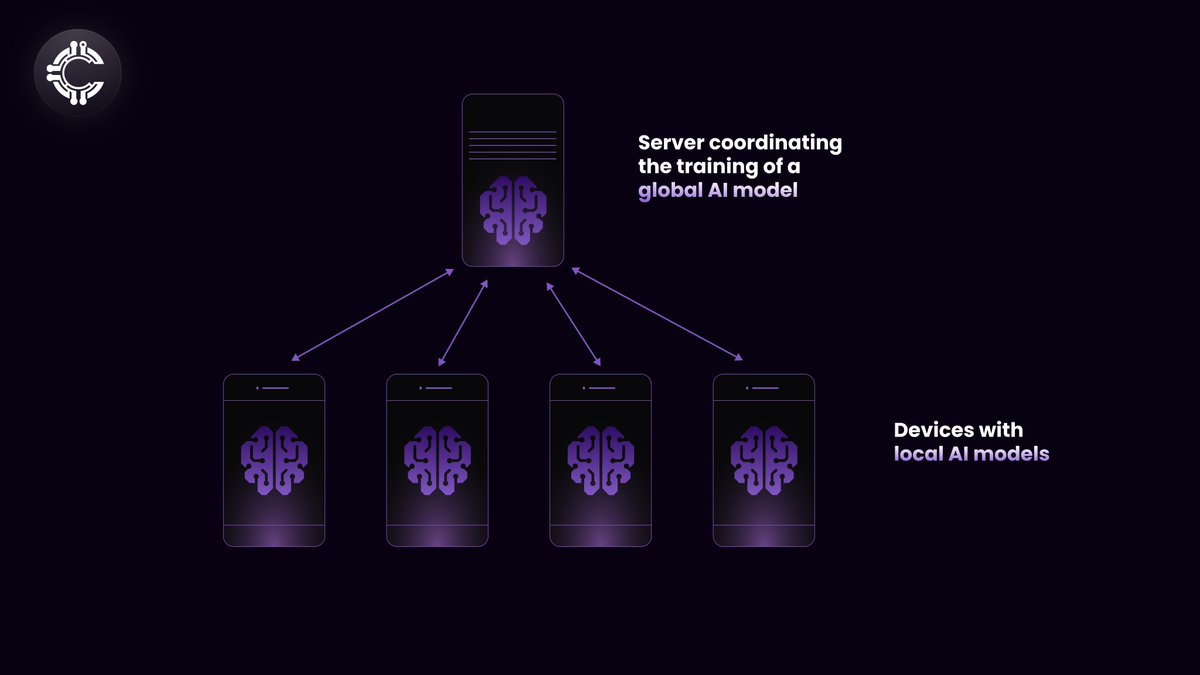

What is Federated Learning?

Federated learning is a way to train AI models without your raw data ever leaving your device. Before it, AI models were typically trained on data scraped from the web or collected from consumers, often through cookies, in exchange for free email, music, and other perks. Now, AI training is shifting toward a more decentralized approach.

By processing data at its source, federated learning also offers a way to tap the raw data streaming from sensors on satellites, bridges, machines, and a growing number of smart devices at home and on our bodies, without ever revealing that data to the central server that coordinates training.

How Federated Learning Works

Initialization: A central server distributes an initial global model, often a pre-trained foundation model, to participating clients or devices.

Local Training: Each client trains the model on their private data, updating the model's parameters based on the local data.

Model Aggregation: Clients send their updated model parameters to the central server.

Global Model Update: The central server aggregates the updates from all clients, combining them into a single, improved global model.

Iteration: The process repeats, with clients downloading the updated global model and training on their local data again, further refining the model's performance.
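The five steps above can be sketched in plain Python using Federated Averaging (FedAvg), the weighted-averaging rule described in Google's original federated learning work. This is a minimal simulation under stated assumptions: three hypothetical clients hold private samples of a toy one-dimensional linear model (y = 2x + 1 plus noise), the global model starts from zeros rather than a pre-trained foundation model, and the server only ever receives parameters, never the `(x, y)` pairs. Names such as `local_train` and `aggregate` are illustrative, not a library API.

```python
import random
from typing import List, Tuple

Params = Tuple[float, float]          # (weight, bias) of a toy model y = w * x + b
Dataset = List[Tuple[float, float]]   # (x, y) pairs that never leave their client

def local_train(params: Params, data: Dataset, lr: float = 0.05, epochs: int = 5) -> Params:
    """Local Training: a few steps of gradient descent on this client's mean squared error."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def aggregate(updates: List[Params], sizes: List[int]) -> Params:
    """Global Model Update: average client parameters, weighted by local dataset size."""
    total = sum(sizes)
    w = sum(p[0] * s for p, s in zip(updates, sizes)) / total
    b = sum(p[1] * s for p, s in zip(updates, sizes)) / total
    return w, b

def federated_training(clients: List[Dataset], rounds: int = 100) -> Params:
    global_params: Params = (0.0, 0.0)  # Initialization
    for _ in range(rounds):             # Iteration
        # Local Training + Model Aggregation: only parameters travel to the server.
        updates = [local_train(global_params, data) for data in clients]
        global_params = aggregate(updates, [len(data) for data in clients])
    return global_params

# Three hypothetical clients whose private data follow y = 2x + 1 plus a little noise.
rng = random.Random(0)

def make_client(n_samples: int) -> Dataset:
    xs = [rng.uniform(-1, 1) for _ in range(n_samples)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.05)) for x in xs]

clients = [make_client(n) for n in (20, 35, 50)]
w, b = federated_training(clients)
print(f"learned w={w:.2f}, b={b:.2f}")  # close to the true w=2, b=1
```

Weighting each client by its number of samples lets larger datasets pull the global model harder. When clients' data look alike, as here, this behaves much like training on the pooled data; with very different (non-IID) client data, several local epochs can make clients drift apart, one of the practical issues real deployments have to manage.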

Deep learning models require vast amounts of training data to make accurate predictions. However, companies in heavily regulated industries, such as healthcare, are reluctant to share sensitive data for AI development because of privacy concerns and potential risks. Federated learning offers a way around this by allowing organizations to collaboratively train a decentralized model without sharing confidential data. Learning from medical data such as lung scans and brain MRIs at scale could lead to great advancements in disease detection and treatment, including for cancer.

Limitations of Federated Learning

While federated learning offers a significant improvement in data privacy, it's not entirely immune to attacks. It is most vulnerable during the exchange of model updates between the data hosts and the central server.

Each iteration of this process improves the model's performance but also exposes the underlying training data to potential inference attacks. This means that attackers could extract sensitive information from the model and its updates, even without direct access to the raw data.
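Why can a model update leak data? The simplest case makes it clear. For a linear model trained on a single example with squared error, the gradient for the weights is proportional to the error times the input, while the gradient for the bias is proportional to the error alone, so dividing one by the other returns the private input. The Python sketch below assumes exactly that setup (one record, one linear layer, hypothetical feature values). Real updates average over batches and use deeper networks, which makes reconstruction harder but, as published gradient-inversion attacks have shown, not impossible.

```python
from typing import List, Tuple

def client_update(w: List[float], b: float, x: List[float], y: float) -> Tuple[List[float], float]:
    """What a client would send: gradients of (w.x + b - y)^2 for ONE private record."""
    error = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [2 * error * xi for xi in x]  # equals grad_b * x
    grad_b = 2 * error
    return grad_w, grad_b

def reconstruct_input(grad_w: List[float], grad_b: float) -> List[float]:
    """Attacker's view: since grad_w = grad_b * x, dividing recovers the private input."""
    if grad_b == 0:
        raise ValueError("zero error: this update reveals nothing about x")
    return [g / grad_b for g in grad_w]

private_x = [0.7, 42.0, 1.5]                 # hypothetical features of one private record
private_y = 1.0
global_w, global_b = [0.1, 0.01, -0.2], 0.0  # current global model, known to the server

grad_w, grad_b = client_update(global_w, global_b, private_x, private_y)
print(reconstruct_input(grad_w, grad_b))     # recovers private_x, up to float rounding
```

This is why defences such as secure aggregation or adding noise to updates are often layered on top of plain federated learning.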

Federated learning has other limitations as well:

1. Trust - Not every participant who contributes to the model may have good intentions. Some may misuse their access for their own benefit, putting private data at risk.

2. Single node - If data has to be deleted, the parties are obliged to retrain the model from scratch, because no other copy exists.

3. Lack of transparency - Private data used to train AI models can be full of biases and false information. Because federated learning keeps that data private, there is no way to check it for the problems that surface as inaccuracy, unfairness, or bias in the model's outputs.

Decentralized AI and web3

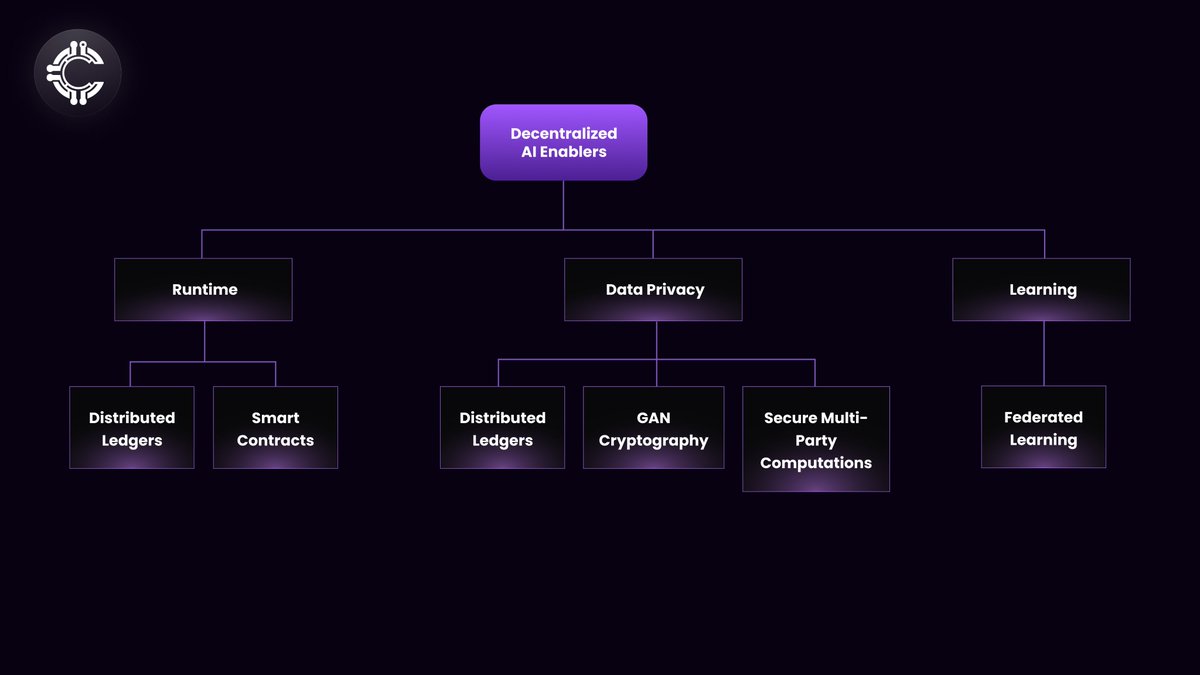

Looking closely, we find that the limitations of federated learning, such as trust concerns and lack of transparency, often line up with the strengths of decentralized AI on blockchain.

But what is a Decentralized AI model?

A decentralized AI model is an AI system that is not controlled or managed by a single entity. Instead, it is distributed across a network of nodes, each with its own computing power and data storage, allowing for greater autonomy, resilience, and fairness compared to traditional centralized AI models.

- [The global artificial intelligence (AI) market size is expected to reach $2,575.16 billion by 2032.](https://www.precedenceresearch.com/artificial-intelligence-market)

- [Blockchain artificial intelligence market size is expected to hit $973.6 million in 2027.](https://www.fortunebusinessinsights.com/blockchain-ai-artificial-intelligence-market-104588)

Instead of a single, massive corporation making decisions, power is distributed among a network of interconnected nodes. These nodes can interact with each other, share data, and collaborate to achieve complex objectives.

How does Decentralized AI work?

Decentralized AI systems function as a network of interconnected nodes, each equipped with its own processing power and data storage. These nodes work together to train and use AI models in a distributed fashion, eliminating the need for a central controller.

Here's a breakdown of how decentralized AI works:

Data Distribution: Data is distributed across the network of nodes, ensuring that no single entity has access to all the data. This helps protect data privacy and security.

Model Training: AI models are trained on the local data of each node.

Model Aggregation: The trained models are aggregated and combined to create a more powerful global model. Federated learning can be used for this step, so that only model updates are shared, not the raw data.

Inference and Decision-Making: Once the global model is trained, it can be used for inference and decision-making. This can be done on any node in the network, or even on devices connected to the network.

Incentivization: Decentralized AI systems often use incentives to encourage nodes to participate in the network and contribute their resources. These incentives can be in the form of tokens, rewards, or other benefits.
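The steps above leave open how nodes combine their models without a central controller. One well-known option, swapped in here as an illustration rather than what any particular network uses, is gossip averaging: neighbouring nodes repeatedly average their parameters pairwise until everyone agrees on the network-wide mean. The Python sketch below assumes each node holds a single scalar parameter on a ring of peers, and adds a hypothetical `token_rewards` rule that splits a reward pool in proportion to the data each node contributed.

```python
from typing import Dict, List

def gossip_average(values: List[float], rounds: int = 200) -> List[float]:
    """Peer-to-peer aggregation on a ring: in each round, disjoint pairs of
    neighbouring nodes replace their values with the pair's average. Every
    exchange preserves the total, so all nodes drift to the network-wide mean
    without a central server ever collecting the values."""
    vals = list(values)
    n = len(vals)
    for r in range(rounds):
        start = r % 2  # alternate even and odd pairings
        for i in range(start, n - 1 + start, 2):
            j = (i + 1) % n  # right-hand neighbour on the ring
            vals[i] = vals[j] = (vals[i] + vals[j]) / 2
    return vals

def token_rewards(samples_contributed: Dict[str, int], reward_pool: float) -> Dict[str, float]:
    """Hypothetical incentive rule: split a reward pool in proportion to data contributed."""
    total = sum(samples_contributed.values())
    return {node: reward_pool * count / total for node, count in samples_contributed.items()}

local_weights = [1.8, 2.1, 2.0, 2.3, 1.8]  # each node's locally trained parameter
print(gossip_average(local_weights))       # every entry very close to 2.0
print(token_rewards({"node_a": 100, "node_b": 300}, reward_pool=40.0))
# {'node_a': 10.0, 'node_b': 30.0}
```

This plain average treats every node equally; weighting by sample count, as in federated averaging, would require each node to gossip its sample count alongside its parameters.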

Data Privacy Superhero: Decentralized AI

While sharing data with multiple parties for AI training might initially seem like a privacy risk compared to centralized AI, decentralized AI offers a robust solution.

Unlike traditional centralized AI models, which store data in a single location, decentralized AI distributes data across a network, reducing the vulnerability to unauthorized access and single points of failure.

1. Distributed data storage: Because the training data is distributed, there is no single store of sensitive data, which reduces the risk of data breaches.

2. Secure Multi-Party Computation (SMPC): Multiple parties can jointly compute a function over their private inputs without revealing those inputs to each other. This enables collaborative AI development while protecting the privacy of each party's data.

3. Homomorphic Encryption: Homomorphic encryption allows computations to be performed on encrypted data without decrypting it first. This means that AI models can learn and work with secret data without anyone knowing what that data is. This keeps the data safe the whole time.
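Both techniques can be sketched in a few lines of Python. The first half shows additive secret sharing, one of the basic building blocks of SMPC: each party splits its private number into random shares, so the group can compute the total without anyone seeing an individual input. The second half is textbook Paillier encryption, which is only additively homomorphic (multiplying two ciphertexts adds the hidden plaintexts), not fully homomorphic. The primes and moduli are tiny toy values chosen for readability and are completely insecure; real systems rely on vetted cryptographic libraries and much larger keys. The code needs Python 3.8+ for `pow(x, -1, n)`.

```python
import math
import random
from typing import List, Tuple

# ---------- SMPC building block: additive secret sharing ----------
MODULUS = 2**61 - 1  # all share arithmetic is done modulo this (toy choice)

def share(secret: int, n_parties: int, rng: random.Random) -> List[int]:
    """Split a secret into n shares; any n-1 of them look uniformly random."""
    shares = [rng.randrange(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares

def secure_sum(secrets: List[int], rng: random.Random) -> int:
    """Each party shares its secret; party j only adds up the j-th shares it receives."""
    n = len(secrets)
    all_shares = [share(s, n, rng) for s in secrets]  # row i = shares of party i
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    return sum(partial_sums) % MODULUS                # only the total is revealed

# ---------- Homomorphic encryption: textbook Paillier (additive only) ----------
def paillier_keygen(p: int = 293, q: int = 433) -> Tuple[int, Tuple[int, int]]:
    """Tiny, INSECURE demo primes; real keys use primes of 1024 bits or more."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)                                # valid because g = n + 1
    return n, (lam, mu)

def encrypt(n: int, m: int, rng: random.Random) -> int:
    n_sq = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:  # r must be invertible mod n
        r = rng.randrange(1, n)
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq

def decrypt(n: int, private_key: Tuple[int, int], c: int) -> int:
    lam, mu = private_key
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod n)."""
    return (c1 * c2) % (n * n)

rng = random.Random(7)
print(secure_sum([120, 340, 95], rng))  # 555

n, private_key = paillier_keygen()
c = add_encrypted(n, encrypt(n, 120, rng), encrypt(n, 340, rng))
print(decrypt(n, private_key, c))       # 460
```

In a privacy-preserving training setup, the same ideas let a coordinator add up model updates from many parties while only ever seeing the combined result.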

Future of information: Decentralized AI

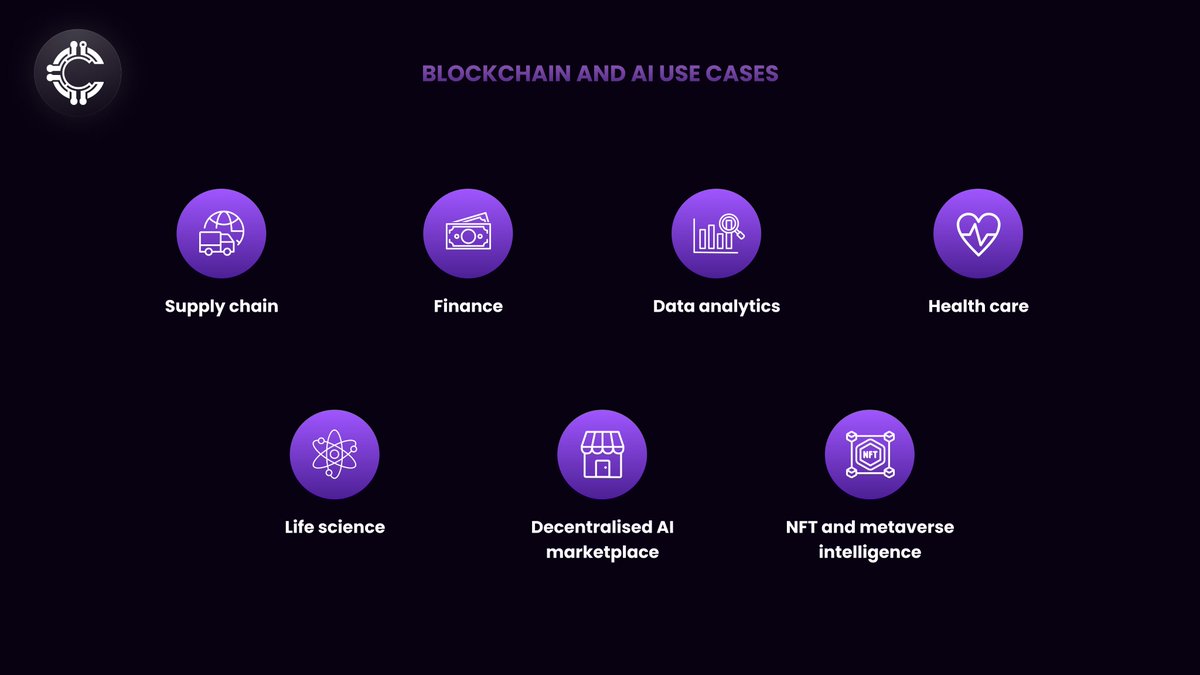

1. AI Agents Marketplace: A decentralized marketplace for AI agents is like a store where you can find specialized AI agents for different tasks. Users could choose from various agents tailored to specific needs, ensuring flexibility and efficiency.

2. Specialized Task Execution: Decentralized AI agents could be trained efficiently for specialized tasks, such as financial analysis, legal research, or medical diagnostics, where they could process vast amounts of data, generate reports, and provide expert-level insights.

3. Personalized Search and Information Retrieval: Imagine AI assistants who know your interests and what you're looking for. They could find you exactly what you need, even if you don't know the right words to search for. Decentralized AI agents can be trained on users' private data to act as personal search experts.

4. Collective Intelligence: Multiple AI models can interact with each other to share information and data, making them highly interoperable and efficient to train.

5. Reduced Centralized Control: Since these AI agents are decentralized, they wouldn't be controlled by a few big companies. This would make information and resources fairer and more accessible to everyone, leading to new and exciting ways of using AI.
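The marketplace idea in item 1 boils down to a registry that matches tasks to agents. The Python sketch below is a minimal, in-memory illustration under assumed rules: agents list their skills, a hypothetical price in tokens, and a rating, and a request is routed to the cheapest agent that offers the skill and meets a minimum rating. In a decentralized marketplace, the registry itself would be replicated across nodes rather than held by one party; that part is not modelled here.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

@dataclass(frozen=True)
class AgentListing:
    name: str
    skills: FrozenSet[str]
    price: float   # hypothetical price per task, in tokens
    rating: float  # hypothetical average user rating, 0 to 5

class Marketplace:
    """In-memory stand-in for a registry that, in a decentralized setting,
    would be replicated across nodes rather than held by one party."""

    def __init__(self) -> None:
        self.listings: List[AgentListing] = []

    def register(self, listing: AgentListing) -> None:
        self.listings.append(listing)

    def find(self, skill: str, min_rating: float = 0.0) -> Optional[AgentListing]:
        """Return the cheapest agent offering `skill` with at least `min_rating`."""
        matches = [a for a in self.listings if skill in a.skills and a.rating >= min_rating]
        return min(matches, key=lambda a: a.price, default=None)

market = Marketplace()
market.register(AgentListing("FinBot", frozenset({"financial_analysis"}), 5.0, 4.2))
market.register(AgentListing("LexAgent", frozenset({"legal_research"}), 8.0, 4.8))
market.register(AgentListing("QuickFin", frozenset({"financial_analysis", "summarization"}), 3.0, 3.9))

print(market.find("financial_analysis").name)                  # QuickFin
print(market.find("financial_analysis", min_rating=4.0).name)  # FinBot
print(market.find("medical_diagnostics"))                      # None
```

Choosing on price alone is just one possible policy; a real marketplace would likely weigh reputation, latency, and verifiable track records as well.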

AI + Decentralization = Future of Humankind / Conclusion

The journey from early AI to the rise of decentralized AI agents has been nothing short of remarkable. From the early days of automation to the breakthroughs in language models like GPT-3, AI has come a long way. While the allure of AGI continues to captivate researchers and enthusiasts, the decentralized approach offers a promising path forward.

As we saw in the beginning, AI started as a way to automate tasks, but now it's evolving into something much more powerful and flexible.

By combining the power of AI with the principles of decentralization, we can create a future where AI is more accessible, secure, and aligned with our values. Decentralized AI agents have the potential to revolutionize industries, from healthcare and finance to education and entertainment. As we continue to explore the possibilities of this technology, it is essential to prioritize privacy, transparency, and ethical considerations to ensure a beneficial future for all.

About Cluster Protocol

Cluster Protocol is a decentralized infrastructure for AI that enables anyone to build, train, deploy, and monetize AI models in a few clicks. Our mission is to democratize AI by making it accessible, affordable, and user-friendly for developers, businesses, and individuals alike. We are dedicated to enhancing AI model training and execution across distributed networks, and we employ advanced techniques such as fully homomorphic encryption and federated learning to safeguard data privacy and promote secure data localization.

Cluster Protocol also supports decentralized datasets and collaborative model training environments, which reduce the barriers to AI development and democratize access to computational resources. We believe in the power of templatization to streamline AI development.

Cluster Protocol offers a wide range of pre-built AI templates, allowing users to quickly create and customize AI solutions for their specific needs. Our intuitive infrastructure empowers users to create AI-powered applications without requiring deep technical expertise.

Cluster Protocol provides the necessary infrastructure for creating intelligent agentic workflows that can autonomously perform actions based on predefined rules and real-time data. Additionally, individuals can leverage our platform to automate their daily tasks, saving time and effort.
