Unraveling the Magic: How Large Language Models (LLMs) Shape Our Digital Conversations

Apr 6, 2024

9 min Read

*Imagine you’re in the midst of a lively online conversation. The responses are swift, the dialogues are coherent, and every once in a while, a humorous quip is thrown in. The catch? You’re conversing with a computer program, not a human being. Far from science fiction, this is the present reality made possible by Large Language Models (LLMs) such as OpenAI’s GPT-4.*

## What Exactly Are Large Language Models? ##

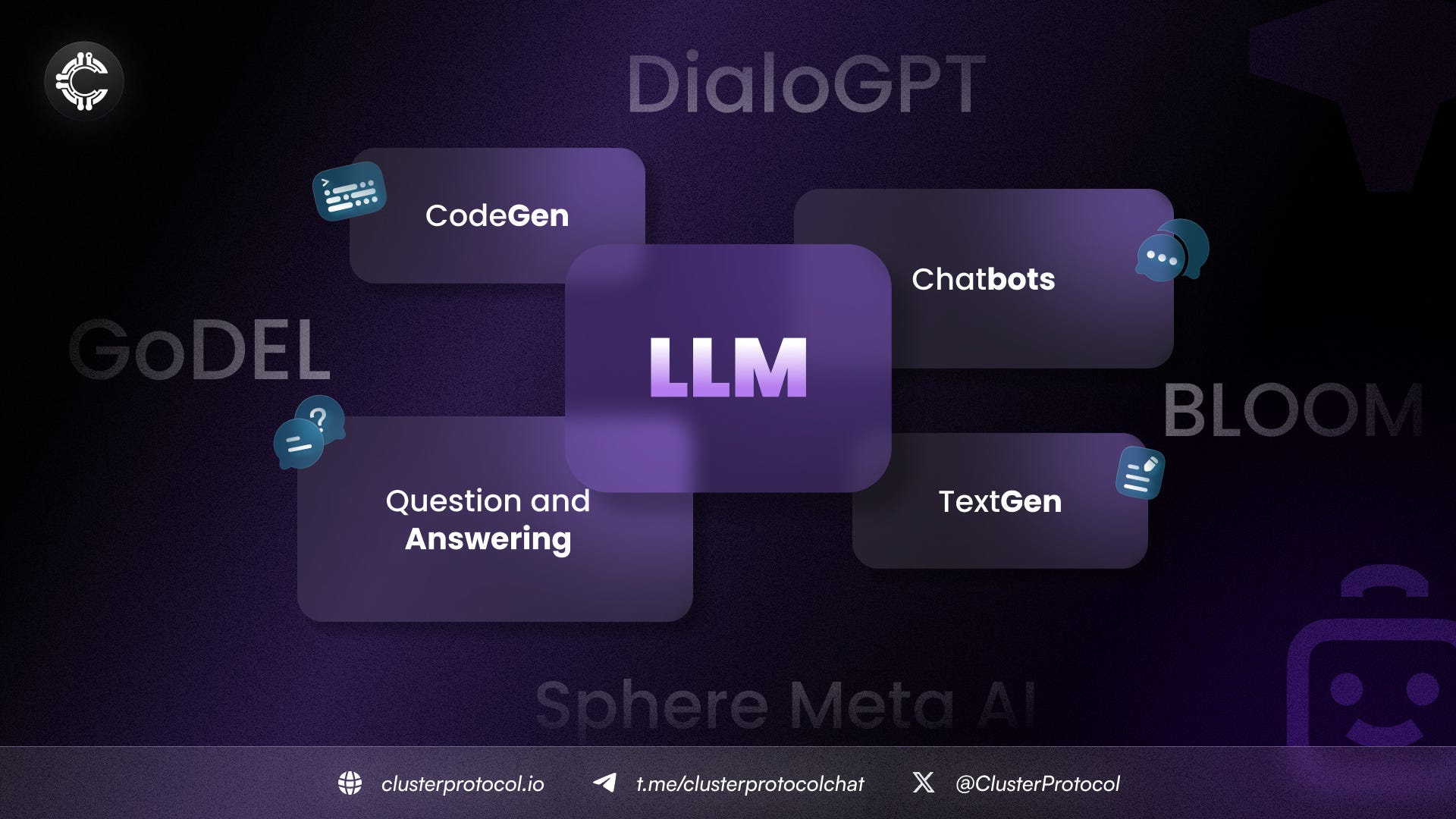

At their core, Large Language Models (LLMs) are a form of artificial intelligence, designed to generate text. They are remarkably versatile, capable of composing essays, answering questions, and even creating poetry. The term ‘large’ in LLMs refers to both the volume of data they’re trained on and their size, measured by the number of parameters they possess. Imagine these models as voracious readers, devouring all the books in a vast digital library like Wikipedia or Google Books. They learn from this massive textual buffet, absorbing patterns, nuances, and linguistic structures.

## How Do LLMs Work? ##

Picture yourself learning a new language. You read books, listen to conversations, and watch movies. As you absorb information, you recognise patterns — how sentences are structured, the meanings of different words, and even subtle nuances like slang or idioms. LLMs work similarly. They start by studying tons of text data — books, articles, web content. This is their ‘schooling,’ where they learn the intricate connections between words and phrases. Deep learning, their educational process, involves teaching themselves about language based on the patterns they identify in the data.

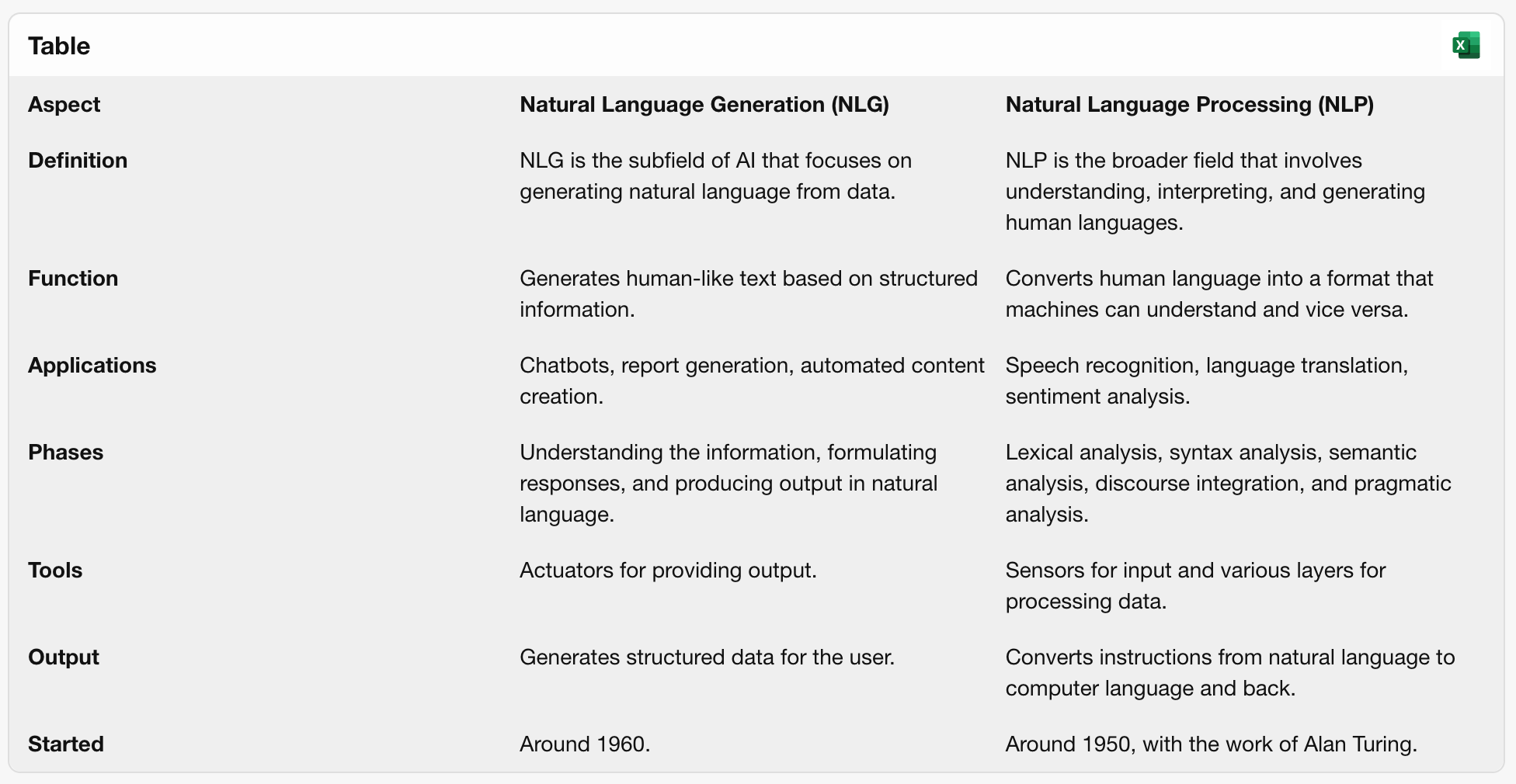

Once ‘schooled,’ LLMs are ready to generate new content. They use something called natural language generation (NLG). When faced with an input (like a question or a sentence), they draw upon their learning to craft a coherent response. It’s like having a conversation with a well-read friend who can conjure up witty remarks or insightful insights.

At its core, NLG is a software process driven by AI. Its mission? To transform structured and unstructured data into human-readable language. Imagine NLG as a digital wordsmith, adept at translating complex information into sentences that resonate with us — much like a skilled writer crafting an engaging article or a friendly chatbot responding to our queries.

Here are the key points about NLG:

1. Input and Output: NLG takes data as input — this could be anything from statistical figures to database entries. It then weaves this raw material into coherent, understandable text. The output can be in the form of written paragraphs, spoken sentences, or even captions for images.

2. Applications of NLG:

* Automated Reporting: NLG generates various reports, such as weather forecasts or patient summaries.

* Content Creation: It’s the magic behind personalized emails, product descriptions, and blog posts.

* Chatbots and Virtual Assistants: Ever chatted with a helpful bot? NLG powers their conversational abilities.

* Data Insights: NLG distills complex data into digestible narratives.

3. How NLG Works:

* Structured Data: NLG starts with structured data — facts, numbers, or templates. For instance, consider pollen levels in different parts of Scotland. From these numbers, an NLG system can create a concise summary of pollen forecasts.

* Grammar and Context: NLG systems understand grammar rules, context, and linguistic nuances. They decide how to phrase information to make it sound natural.

* Machine Learning: Some NLG systems learn from large amounts of human-written text. They use statistical models to predict the best way to express a given idea.

4. Challenges and Complexity:

* Ambiguity: Human languages allow for ambiguity and diverse expressions. NLG must navigate this complexity.

* Decision-Making: NLG chooses specific textual representations from many possibilities.

* Complementary to NLU: While Natural Language Understanding (NLU) disambiguates input, NLG transforms representations into words.

## From ELIZA to Today: NLG’s Evolution ##

NLG has come a long way since the development of ELIZA in the mid-1960s. Commercial use of NLG gained momentum in the 1990s. Techniques range from simple template-based systems (like mail merges) to sophisticated models with deep understanding of human grammar.

So next time you read a weather report, receive an automated email, or chat with a friendly AI, remember that NLG is the invisible hand behind those words — a bridge connecting data and language.

ELIZA is an early natural language processing computer program that was developed from 1964 to 1966 at MIT by Joseph Weizenbaum. It’s known for being one of the first chatbots and for its ability to simulate conversation by using a pattern matching and substitution methodology. This gave users the illusion that the program understood the conversation, though it didn’t have any built-in understanding of the content it was processing.

The most famous script used by ELIZA was called DOCTOR, which simulated a Rogerian psychotherapist, essentially mirroring the user’s statements back to them. Despite its simplicity, ELIZA was able to convince some users that it genuinely understood their messages, leading to what is now known as the “ELIZA effect” — the tendency to attribute human-like understanding to computer programs🔗.

## Why Should You Care About LLMs? ##

LLMs are more than just linguistic acrobats. Their advanced comprehension of language enables a diverse array of applications:

1. Conversational AI: Think Amazon’s Alexa or Apple’s Siri. LLMs power these chatbots, answering queries and engaging in coherent conversations.

2. Content Creation: They can generate human-like text, from product descriptions to blog posts.

3. Translation: LLMs bridge language gaps, making communication seamless.

4. Sentiment Analysis: They gauge emotions in text, helping businesses understand customer feedback.

## How LLMs Embrace Decentralization? ##

1. Core Philosophy: LLMs, such as Chat GPT and BERT, adhere to decentralization principles:

* Autonomy: They function independently, capturing complex relationships in text.

* Transparency: Open networks allow anyone to participate.

* Censorship Resistance: LLMs operate without central control.

* Privacy: They respect user privacy.

2. Architecture and Parameters:

* LLMs consist of layers like feedforward, embedding, and attention layers.

* They learn intricate patterns during training from diverse language data.

* Their size has grown exponentially: GPT-1 (117 million parameters), GPT-2 (1.5 billion parameters), and GPT-3 (175 billion parameters).

Imagine you have a huge coloring book with lots of pictures. The first coloring book (GPT-1) has 117 pictures to color. That’s like the number of crayons you have to color with. The second book (GPT-2) is much bigger, with 1,500 pictures. And the third one (GPT-3) is super huge, with 175,000 pictures!

Now, think of each picture as a ‘parameter,’ which is a special helper that the AI uses to learn and understand things. Just like the more pictures you have, the more you can color and create, the more parameters the AI has, the better it can learn and understand what you’re saying. So, GPT-3 is like having a gigantic coloring book that makes the AI super smart because it has so many pictures (parameters) to learn from! 🎨✨

3. Achieving Decentralization:

* Miners: In public blockchains, miners validate transactions, maintaining decentralization.

* Open Access: Anyone with an internet connection can participate.

* Semantic and Syntactic Understanding: LLMs generate text using the semantic and syntactic rules of a language.

Parameters in models like GPT-3 are essentially the learned weights that determine the output given a particular input. They are the result of the training process where the model learns from vast amounts of data. Here are a few examples of parameters and their uses:

* Attention Weights: These parameters help the model decide which parts of the input are important when generating a response. For example, in a sentence, the model might learn to pay more attention to nouns or verbs to understand the main action or subject.

* Word Embeddings: These are vectors that represent words in a high-dimensional space. They allow the model to understand the meaning of words based on their context. This is useful for tasks like translation or summarizing, where the meaning of words in relation to others is crucial.

* Layer Weights: Each layer in a neural network has its own set of parameters. These weights help in transforming input from one form to another, capturing complex patterns in data. They are essential for the model to make accurate predictions or generate coherent text.

* Bias Terms: These parameters are used to adjust the output of a neuron in the network, ensuring that the model has flexibility in learning patterns. They can be critical for fine-tuning the model’s performance on specific tasks.

These parameters work together to help the AI understand and generate human-like text, making it useful for a wide range of applications like writing assistance, conversation, and even creating code. 🤖✍️

Large Language Models (LLMs) play a pivotal role in decentralized systems, revolutionizing how we interact with technology. Let’s explore their applications and impact:

1. Collaborative and Privacy-Preserving Training:

* In decentralized settings, data privacy is crucial. LLMs can be trained collaboratively on distributed private data using techniques like federated learning (FL).

* Multiple data owners participate in training without transmitting raw data. This preserves privacy while enhancing LLM performance1🔗.

2. Unlocking Distributed Private Data:

* Decentralized systems often have underutilized private data scattered across various sources.

* LLMs can tap into this distributed data, improving their understanding and generating more accurate responses.

3. OpenFedLLM: A Framework for Collaborative Training:

* OpenFedLLM is a research-friendly framework that facilitates collaborative LLM training via FL.

* It covers instruction tuning, value alignment, and supports diverse domains and evaluation metrics1🔗.

4. Performance Boost via Federated Learning:

* Experiments show that all FL algorithms outperform local training for LLMs.

5. Decentralized LLMs and Blockchain:

* LLMs can be integrated into blockchain networks, ensuring trustless, transparent language processing.

6. Challenges and Opportunities:

* Decentralized LLMs face resource constraints (CPU, GPU memory, network bandwidth) but hold immense potential.

In summary, LLMs empower decentralized systems by bridging privacy, collaboration, and performance. As we embrace this digital renaissance, LLMs continue to shape our collective intelligence — one algorithm at a time.

## The Promises of Decentralized AI ##

Now, let’s pivot to the fascinating world of decentralized AI. Imagine distributing the control over LLMs, rather than concentrating it in the hands of a few organizations. This decentralization mitigates the potential for overarching influence by any single entity. Here’s why it matters:

1. Democratization: Decentralized AI dismantles exclusivity. It’s an age where big data analytics and AI tools are shared, not hoarded. Knowledge and power disperse across the many.

2. Checks and Balances: Decentralization limits mass surveillance and manipulation. No single entity can wield AI against citizen interests on a massive scale.

3. Innovation: By preventing monopolization, decentralized AI fosters innovation. It’s an open arena where diverse applications thrive.

As we stand on the brink of a new era in digital communication, Large Language Models like GPT-4 are not just tools; they are the architects of a paradigm shift in human-computer interaction. They challenge our preconceived notions of creativity and understanding, blurring the lines between human and machine intelligence. As these models continue to evolve, they promise to expand the horizons of possibility, transforming vast oceans of data into meaningful, human-like dialogue. The magic of LLMs lies not in their ability to mimic human conversation but in their potential to enrich it, opening doors to new realms of exploration in the digital space. The future of LLMs is not just about technological advancement; it’s about redefining the essence of connection in our increasingly digital world.

Cluster Protocol is part of the exciting journey of AI. It allows everyone to help improve the way we interact with digital technology by training Large Language Models. Your knowledge and skills can help make AI smarter. We aim to make AI development open to everyone, not just a select few. This means that a variety of voices from around the world can influence the future of technology. By working together, we can use the power of this shared approach to create an AI that reflects the diversity of its users.

Connect with us

Website: [https://www.clusterprotocol.io](https://www.clusterprotocol.io/)

Twitter: [https://twitter.com/ClusterProtocol](https://twitter.com/ClusterProtocol)

Telegram Announcements: [https://t.me/clusterprotocolann](https://t.me/clusterprotocolann)

Telegram Community: [https://t.me/clusterprotocolchat](https://t.me/clusterprotocolchat)